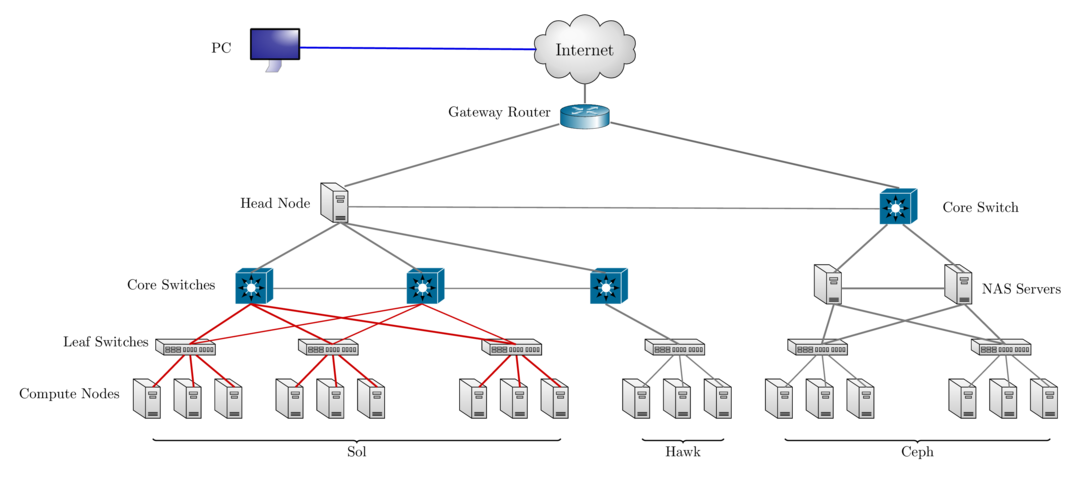

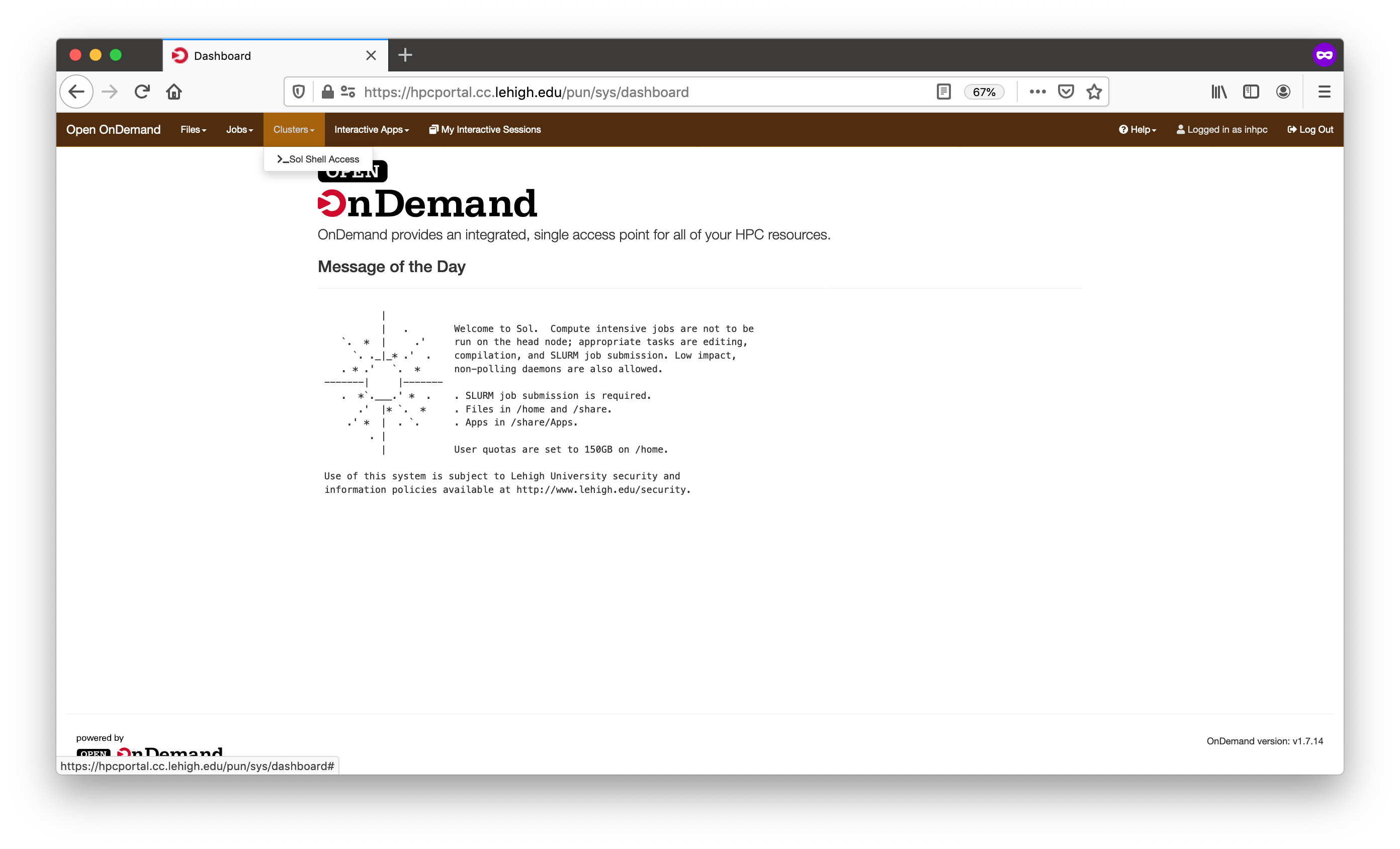

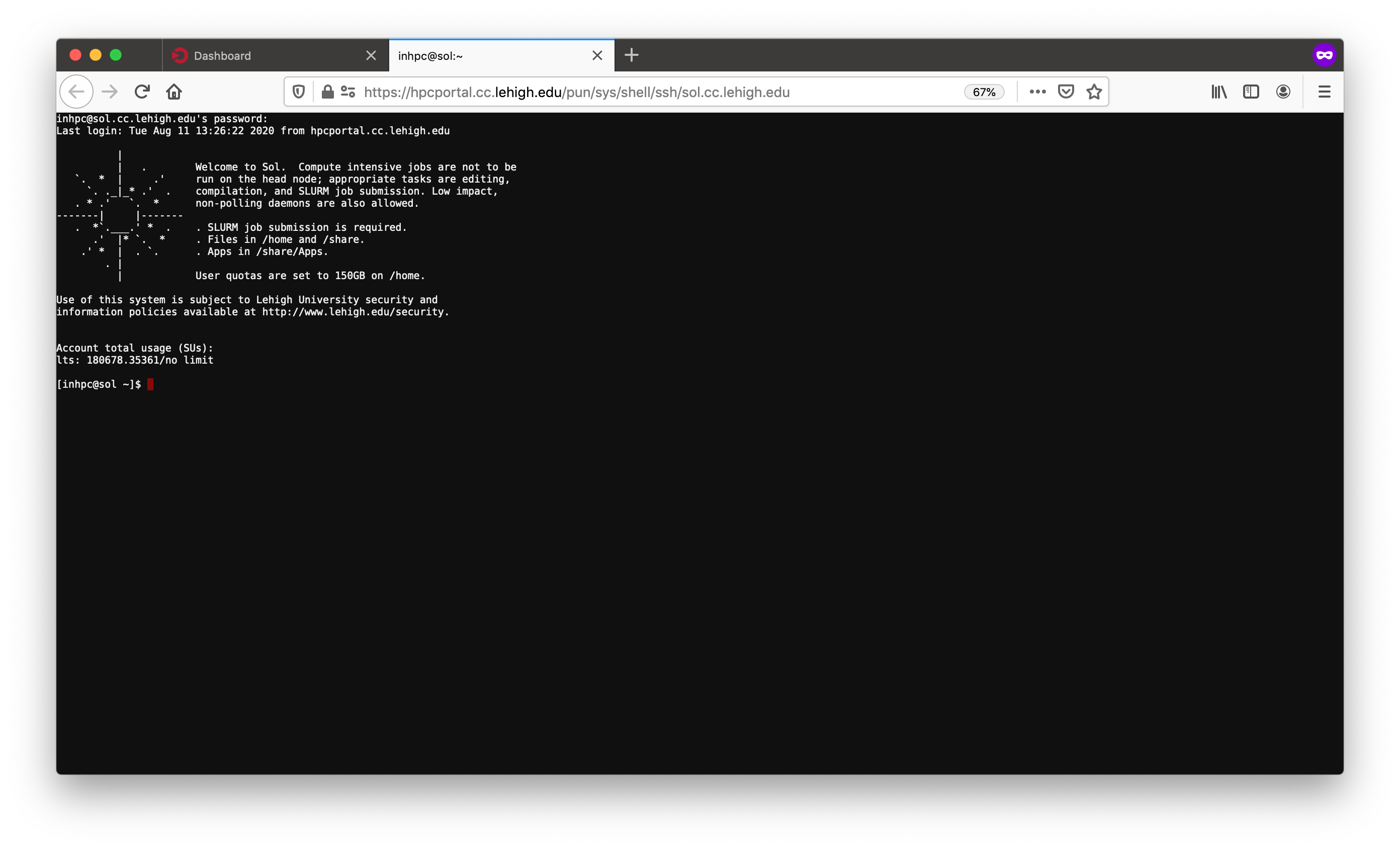

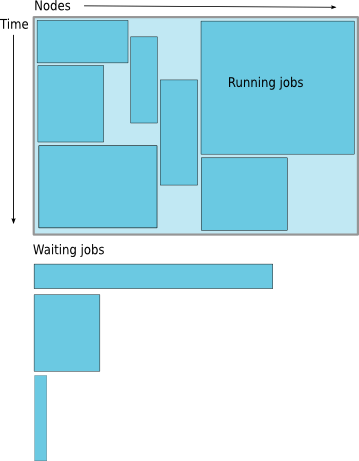

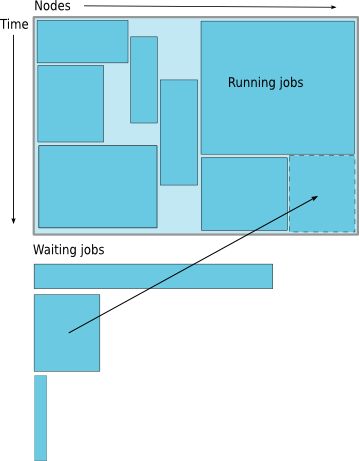

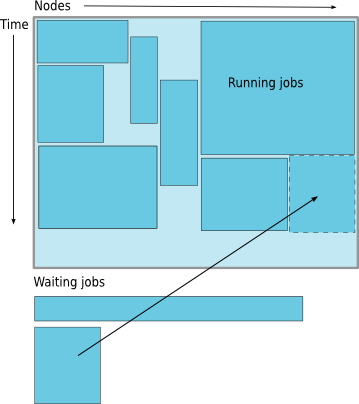

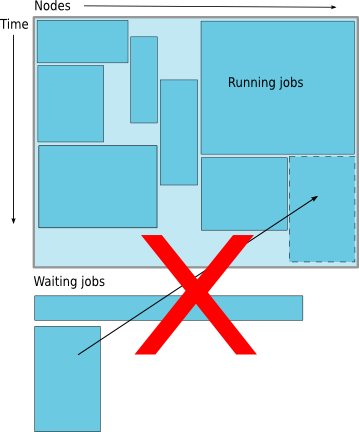

class: center, middle, inverse, title-slide # Using SLURM scheduler on Lehigh’s HPC clusters ## Research Computing ### Library & Technology Services ### <a href="https://researchcomputing.lehigh.edu" class="uri">https://researchcomputing.lehigh.edu</a> --- class: inverse, middle # Research Computing Resources --- # Sol - Lehigh's Shared High Performance Computing Cluster - built by investments from Provost and Faculty. - 9 nodes<sup>a</sup>, dual 10-core Intel Xeon E5-2650 v3 2.3GHz CPU, 128GB RAM. - 33 nodes, dual 12-core Intel Xeon E5-2670 v3 2.3Ghz CPU, 128GB RAM. - 14 nodes, dual 12-core Intel Xeon E5-2650 v4 2.3Ghz CPU, 64GB RAM. - 1 node, dual 8-core Intel Xeon 2630 v3 2.4GHz CPU, 512GB RAM. - 24 nodes, dual 18-core Intel Xeon Gold 6140 2.3GHz CPU, 192GB RAM. - 6 nodes, dual 18-core Intel Xeon Gold 6240 2.6GHz, 192GB RAM. - 72 nVIDIA GTX 1080 & 48 nVIDIA RTX 2080TI GPU cards. - 2 nodes, dual 26-core Intel Xeon Gold 6230R, 2.1GHz, 384GB RAM - 2:1 oversubscribed Infiniband EDR (100Gb/s) interconnect fabric. - 21.97M core hours or service units (SUs) of computing available. - Only 1.40M from Provost investment available Lehigh researchers. <br /> .footnote[ a: 8 nodes invested by Provost available to all Lehigh researchers. ] --- # Condo Investors * Dimitrios Vavylonis, Physics (1 node) * Wonpil Im, Biological Sciences (37 nodes, 98 GPUs) * Anand Jagota, Chemical Engineering (1 node) * Brian Chen, Computer Science & Engineering (3 nodes) * Ed Webb & Alp Oztekin, Mechanical Engineering (6 nodes) * Jeetain Mittal & Srinivas Rangarajan, Chemical Engineering (13 nodes, 16 GPUs) * Seth Richards-Shubik, Economics (1 node) * Ganesh Balasubramanian, Mechanical Engineering (7 nodes) * Department of Industrial & Systems Engineering (2 nodes) * Paolo Bocchini, Civil and Structural Engineering (1 node) * Lisa Fredin, Chemistry (6 nodes) * Hannah Dailey, Mechanical Engineering (1 node) * College of Health (2 nodes) - Condo Investments: 81 nodes, 2348 CPUs, 120 GPUs, 20.57M SUs --- # Hawk * Funded by [NSF Campus Cyberinfrastructure award 2019035](https://www.nsf.gov/awardsearch/showAward?AWD_ID=2019035&HistoricalAwards=false). - PI: Ed Webb (MEM). - co-PIs: Balasubramanian (MEM), Fredin (Chemistry), Pacheco (LTS), and Rangarajan (ChemE). - Sr. Personnel: Anthony (LTS), Reed (Physics), Rickman (MSE), and Takáč (ISE). * Compute - 26 nodes, dual 26-core Intel Xeon Gold 6230R, 2.1GHz, 384GB RAM. - 4 nodes, dual 26-core Intel Xeon Gold 6230R, 1536GB RAM. - 4 nodes, dual 24-core Intel Xeon Gold 5220R, 192GB RAM, 8 nVIDIA Tesla T4. * Storage - 7 nodes, single 16-core AMD EPYC 7302P, 3.0GHz, 128GB RAM, two 240GB SSDs (for OS). - Per node - 3x 1.9TB SATA SSD (for CephFS). - 9x 12TB SATA HDD (for Ceph). * Production: **Feb 1, 2021**. ??? - **Total: 34 nodes, 1752 CPUs, 16.9TB RAM, 32 GPUs, 77TFLOPs, 15.3M SUs** - **Total Storage: 796TB (raw) or 225TB (usable)** - 50% allocated to proposal team, 20% to Open Science Grid and 30% to Lehigh researchers - 40% allocated to proposal team, 35% to Lehigh researchers, 25% to Provost and LTS (R Drive) --- # Configuration <table> <thead> <tr> <th style="text-align:right;"> Nodes </th> <th style="text-align:left;"> Intel Xeon CPU Type </th> <th style="text-align:left;"> CPU Speed (GHz) </th> <th style="text-align:right;"> CPUs </th> <th style="text-align:right;"> GPUs </th> <th style="text-align:right;"> CPU Memory (GB) </th> <th style="text-align:right;"> GPU Memory (GB) </th> <th style="text-align:right;"> CPU TFLOPS </th> <th style="text-align:right;"> GPU TFLOPs </th> <th style="text-align:right;"> SUs </th> </tr> </thead> <tbody> <tr> <td style="text-align:right;"> 9 </td> <td style="text-align:left;"> E5-2650 v3 </td> <td style="text-align:left;"> 2.3 </td> <td style="text-align:right;"> 180 </td> <td style="text-align:right;"> 10 </td> <td style="text-align:right;"> 1024 </td> <td style="text-align:right;"> 80 </td> <td style="text-align:right;"> 5.7600 </td> <td style="text-align:right;"> 2.57000 </td> <td style="text-align:right;"> 1576800 </td> </tr> <tr> <td style="text-align:right;"> 33 </td> <td style="text-align:left;"> E5-2670 v3 </td> <td style="text-align:left;"> 2.3 </td> <td style="text-align:right;"> 792 </td> <td style="text-align:right;"> 62 </td> <td style="text-align:right;"> 4224 </td> <td style="text-align:right;"> 496 </td> <td style="text-align:right;"> 25.3440 </td> <td style="text-align:right;"> 15.93400 </td> <td style="text-align:right;"> 6937920 </td> </tr> <tr> <td style="text-align:right;"> 14 </td> <td style="text-align:left;"> E5-2650 v4 </td> <td style="text-align:left;"> 2.2 </td> <td style="text-align:right;"> 336 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 896 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 9.6768 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 2943360 </td> </tr> <tr> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> E5-2640 v3 </td> <td style="text-align:left;"> 2.6 </td> <td style="text-align:right;"> 16 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 512 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0.5632 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 140160 </td> </tr> <tr> <td style="text-align:right;"> 24 </td> <td style="text-align:left;"> Gold 6140 </td> <td style="text-align:left;"> 2.3 </td> <td style="text-align:right;"> 864 </td> <td style="text-align:right;"> 48 </td> <td style="text-align:right;"> 4608 </td> <td style="text-align:right;"> 528 </td> <td style="text-align:right;"> 41.4720 </td> <td style="text-align:right;"> 18.39200 </td> <td style="text-align:right;"> 7568640 </td> </tr> <tr> <td style="text-align:right;"> 6 </td> <td style="text-align:left;"> Gold 6240 </td> <td style="text-align:left;"> 2.6 </td> <td style="text-align:right;"> 216 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1152 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 10.3680 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 1892160 </td> </tr> <tr> <td style="text-align:right;"> 2 </td> <td style="text-align:left;"> Gold 6230R </td> <td style="text-align:left;"> 2.1 </td> <td style="text-align:right;"> 104 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 768 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 4.3264 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 911040 </td> </tr> <tr> <td style="text-align:right;"> 26 </td> <td style="text-align:left;"> Gold 6230R </td> <td style="text-align:left;"> 2.1 </td> <td style="text-align:right;"> 1352 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 9984 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 56.2432 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 11843520 </td> </tr> <tr> <td style="text-align:right;"> 4 </td> <td style="text-align:left;"> Gold 6230R </td> <td style="text-align:left;"> 2.1 </td> <td style="text-align:right;"> 208 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 6144 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 8.6528 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 1822080 </td> </tr> <tr> <td style="text-align:right;"> 4 </td> <td style="text-align:left;"> Gold 5220R </td> <td style="text-align:left;"> 2.2 </td> <td style="text-align:right;"> 192 </td> <td style="text-align:right;"> 32 </td> <td style="text-align:right;"> 768 </td> <td style="text-align:right;"> 512 </td> <td style="text-align:right;"> 4.3008 </td> <td style="text-align:right;"> 8.10816 </td> <td style="text-align:right;"> 1681920 </td> </tr> <tr> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 123 </td> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #643700 !important;"> </td> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #643700 !important;"> </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 4260 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 152 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 30080 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 1616 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 166.7072 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 45.00416 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 37317600 </td> </tr> </tbody> </table> * SUs available for general use: 4.80M * rest allocated to investors, and grant distribution including education use. <!--- * Total SUs available: 37.32M # LTS Managed Faculty Resources * __Monocacy__: Ben Felzer, Earth & Environmental Sciences. * Eight nodes, dual 8-core Intel Xeon E5-2650v2, 2.6GHz, 64GB RAM. * Theoretical Performance: 2.662TFlops. - __Baltrusaitislab__: Jonas Baltrusaitis, Chemical Engineering. - Three nodes, dual 16-core AMD Opteron 6376, 2.3Ghz, 128GB RAM. - Theoretical Performance: 1.766TFlops. * __Pisces__: Keith Moored, Mechanical Engineering and Mechanics. * Six nodes, dual 10-core Intel Xeon E5-2650v3, 2.3GHz, 64GB RAM, nVIDIA Tesla K80. * Theoretical Performance: 3.840 TFlops (CPU) + 17.46TFlops (GPU). - __Pavo__: decommissioned faculty cluster for development and education. - Twenty nodes, dual 8-core Intel Xeon E5-2650v2, 2.6GHz, 64GB RAM. - Theoretical Performance: 6.656TFlops. # Summary of Computational Resources <table> <thead> <tr> <th style="text-align:left;"> Cluster </th> <th style="text-align:right;"> Cores </th> <th style="text-align:right;"> CPU Memory </th> <th style="text-align:right;"> CPU TFLOPs </th> <th style="text-align:right;"> GPUs </th> <th style="text-align:right;"> CUDA Cores </th> <th style="text-align:right;"> GPU Memory </th> <th style="text-align:right;"> GPU TFLOPS </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Monocacy </td> <td style="text-align:right;"> 128 </td> <td style="text-align:right;"> 512 </td> <td style="text-align:right;"> 2.662 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0.000 </td> </tr> <tr> <td style="text-align:left;"> Pavo<sup>a</sup> </td> <td style="text-align:right;"> 320 </td> <td style="text-align:right;"> 1280 </td> <td style="text-align:right;"> 6.656 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0.000 </td> </tr> <tr> <td style="text-align:left;"> Baltrusaitis </td> <td style="text-align:right;"> 96 </td> <td style="text-align:right;"> 384 </td> <td style="text-align:right;"> 1.766 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0.000 </td> </tr> <tr> <td style="text-align:left;"> Pisces </td> <td style="text-align:right;"> 120 </td> <td style="text-align:right;"> 384 </td> <td style="text-align:right;"> 3.840 </td> <td style="text-align:right;"> 12 </td> <td style="text-align:right;"> 29952 </td> <td style="text-align:right;"> 144 </td> <td style="text-align:right;"> 17.422 </td> </tr> <tr> <td style="text-align:left;"> Sol </td> <td style="text-align:right;"> 2404 </td> <td style="text-align:right;"> 12544 </td> <td style="text-align:right;"> 93.184 </td> <td style="text-align:right;"> 120 </td> <td style="text-align:right;"> 393216 </td> <td style="text-align:right;"> 1104 </td> <td style="text-align:right;"> 36.130 </td> </tr> <tr> <td style="text-align:left;"> Total </td> <td style="text-align:right;"> 3068 </td> <td style="text-align:right;"> 15104 </td> <td style="text-align:right;"> 108.108 </td> <td style="text-align:right;"> 132 </td> <td style="text-align:right;"> 423168 </td> <td style="text-align:right;"> 1248 </td> <td style="text-align:right;"> 53.552 </td> </tr> </tbody> </table> - Monocacy, Baltrusaitis and Pisces: decommissioning scheduled for Sep 30, 2021. .footnote[ a: 3 nodes available for short simulations or debug runs. ] --> --- # Network Layout Sol, Hawk & Ceph  --- # Accessing Resources * Sol: accessible using ssh while on Lehigh's network. ```bash ssh username@sol.cc.lehigh.edu ``` * Windows PC require a SSH client such as [MobaXterm](https://mobaxterm.mobatek.net/) or [Putty](https://putty.org/). * Mac and Linux PC's, ssh is built in to the terminal application. * Login to the ssh gateway to get on Lehigh's network or connect to VPN first ```bash ssh username@ssh.cc.lehigh.edu ``` and then login to Sol using the above ssh command. * Alternatively, use the following command while off campus. ```bash ssh -J username@ssh.cc.lehigh.edu username@sol.cc.lehigh.edu ``` * [Configure MobaXterm to use the SSH Gateway](https://confluence.cc.lehigh.edu/x/JhH5Bg). --- # SSH Config * Simplify by creating a ssh configuration file `~/.ssh/config` * assign shortname for the host you are connecting to * set username, port or jump host information * use the short hostnames with the `ssh` and `scp` commands .pull-left[ ```bash Host *ssh HostName ssh.cc.lehigh.edu Port 22 User alp514 Host *sol HostName sol.cc.lehigh.edu Port 22 User alp514 Host *sun HostName sol.cc.lehigh.edu Port 22 User alp514 ProxyCommand ssh -W %h:%p ssh ``` ] .pull-right[ <script id="asciicast-THVibyFLRYsmtVsdOMtIdgEm0" src="https://asciinema.org/a/THVibyFLRYsmtVsdOMtIdgEm0.js" async data-rows=10></script> ] --- # Open OnDemand - Open, Interactive HPC via the Web. - Easy to use, plugin-free, web-based access to supercomputers, - File Management, - Command-line shell access, - Job management and monitoring, and - Various Interactive Applications. * NSF-funded project. * SI2-SSE-1534949 and CSSI-Software-Frameworks-1835725, * Developed by [Ohio Supercomputing Center](https://openondemand.org/), * Deployed at dozens of sites (universities, supercomputing centers). - At Lehigh: https://hpcportal.cc.lehigh.edu. - Lehigh IP or VPN required. - More details in Open OnDemand Seminar on March 10. --- # Open OnDemand  --- # Open OnDemand  --- # Available Software * [Commercial, Free and Open source software](https://go.lehigh.edu/hpcsoftware). - Software is managed using module environment. - Why? We may have different versions of same software or software built with different compilers. - Module environment allows you to dynamically change your *nix environment based on software being used. - Standard on many University and national High Performance Computing resource since circa 2011. * How to use HPC Software on your [linux](https://confluence.cc.lehigh.edu/x/ygD5Bg) workstation? --- # Module Command | Command | Description | |:-------:|:-----------:| | <code>module avail</code> | show list of software available on resource | | <code>module load abc</code> | add software <code>abc</code> to your environment (modify your <code>PATH</code>, <code>LD_LIBRARY_PATH</code> etc as needed) | | <code>module unload abc</code> | remove <code>abc</code> from your environment | | <code>module purge</code> | remove all modules from your environment | | <code>module show abc</code> | display what variables are added or modified in your environment | | <code>module help abc</code> | display help message for the module <code>abc</code> | | <code>module spider abc</code> | learn more about package <code>abc</code> | * Users who prefer not to use the module environment will need to modify their .bashrc or .tcshrc files. Run `module show` for list variables that need modified, appended or prepended. <!--- # Module Command <script id="asciicast-hGo9Uvwjh1sYYL4X50ZlqVMMn" src="https://asciinema.org/a/hGo9Uvwjh1sYYL4X50ZlqVMMn.js" async data-rows=20></script> # Compilers * Open Source: GNU Compiler (also called gcc even though gcc is the c compiler) - 8.3.1 (system default), and 9.3.0. * Commercial: Only two seats of each. - Intel Compiler: 19.0.3 and 20.0.3 * Commercial but available free of charge - NVIDIA HPC SDK<sup>a</sup>: 20.9 - Intel OneAPI: 2021.3 * All except gcc 8.3.1 and OneAPI are available via the module environment. .center[ | Language | GNU | Intel | NVIDIA HPC SDK <sup>b</sup> | OneAPI<sup>d</sup> | |:--------:|:----:|:-----:|::-------------:|:--------:| | Fortran | `gfortran` | `ifort` | `nvfortran` | `ifx` | | C | `gcc` | `icc` | `nvc`<sup>c</sup> | `icx` | | C++ | `g++` | `icpc` | `nvc++` | `icpx` | ] .footnote[ a. includes CUDA for compiling on GPUs.<br /> b. NVIDIA HPC SDK replaces the old PGI compilers. `pgfortran`, `pgcc` and `pgc++` are available for now but you should change your commands to the new `nv` commands.<br /> c. `nvcc` is the cuda compiler while `nvc` is the C compiler. <br /> d. `source /share/Apps/intel-oneapi/2021/setvars.sh` to setup environment. ] # Compiling Code * Usage: `<compiler> <options> <source code>` * Common Compiler options or flags: - `-o myexec`: compile code and create an executable `myexec`. If this option is not given, then a default `a.out` is created. - `-l{libname}`: link compiled code to a library called `libname`. e.g. to use lapack libraries, add `-llapack` as a compiler flag. - `-L{directory path}`: directory to search for libraries. e.g. `-L/usr/lib64 -llapack` will search for lapack libraries in `/usr/lib64`. - `-I{directory path}`: directory to search for include files and fortran modules. - `-On`: optmize code to level n where n=0,1,2,3. - `-g`: generate a level of debugging information in the object file (-On supercedes -g). - `-mcmodel=mem_model`: tells the compiler to use a specific memory model to generate code and store data where mem\_model=small, medium or large. - `-fpic/-fPIC`: generate position independent code (PIC) suitable for use in a shared library. * See [HPC Documentation](https://confluence.cc.lehigh.edu/display/hpc/Compilers#Compilers-CompilerFlags) # Compiling and Running Serial Codes <pre class="wrap" style="font-size: 14px"> [2018-02-22 08:47.27] ~/Workshop/2017XSEDEBootCamp/OpenMP [alp514.sol-d118](842): icc -o laplacec laplace_serial.c [2018-02-22 08:47.46] ~/Workshop/2017XSEDEBootCamp/OpenMP [alp514.sol-d118](843): ./laplacec Maximum iterations [100-4000]? 1000 ---------- Iteration number: 100 ------------ [995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67 ---------- Iteration number: 200 ------------ [995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28 ---------- Iteration number: 300 ------------ [995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49 ---------- Iteration number: 400 ------------ [995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59 ---------- Iteration number: 500 ------------ [995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66 ---------- Iteration number: 600 ------------ [995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70 ---------- Iteration number: 700 ------------ [995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73 ---------- Iteration number: 800 ------------ [995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75 ---------- Iteration number: 900 ------------ [995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77 ---------- Iteration number: 1000 ------------ [995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78 Max error at iteration 1000 was 0.034767 Total time was 4.099030 seconds. </pre> # Intel Compiler Options | Flag | Description | |:----|:--------| | `-ipo` | Enables interprocedural optimization between files. | | `-ax_code_/-x_code_` | Tells the compiler to generate multiple, feature-specific auto-dispatch code paths for Intel processors if there is a performance benefit. code can be COMMON-AVX512, CORE-AVX512, CORE-AVX2, CORE-AVX-I or AVX | | `-xHost` | Tells the compiler to generate instructions for the highest instruction set available on the compilation host processor. DO NOT USE THIS OPTION | | `-fast` | Maximizes speed across the entire program. Also sets `-ipo`, `-O3`, `-no-prec-div`, `-static`, `-fp-model fast=2`, and `-xHost`. Not recommended | | `-funroll-all-loops` | Unroll all loops even if the number of iterations is uncertain when the loop is entered. | | `-mkl` | Tells the compiler to link to certain libraries in the Intel Math Kernel Library (Intel MKL) | | `-static-intel/libgcc` | Links to Intel/GNU libgcc libraries statically, use -static to link all libraries dynamically | # GNU Compiler Options | Flag | Description | |:----|:--------| | `-march=processor` | Generate instructions for the machine type processor . processor can be sandybridge, ivybridge, haswell, broadwell or skylake-avx512. | | `-Ofast` | Disregard strict standards compliance. `-Ofast` enables all `-O3` optimizations. It also enables optimizations that are not valid for all standard-compliant programs. It turns on `-ffast-math` and the Fortran-specific `-fstack-arrays`, unless `-fmax-stack-var-size` is specified, and `-fno-protect-parens`. | | `-funroll-all-loops` | Unroll all loops even if the number of iterations is uncertain when the loop is entered. | | `-shared`/`-static` | Links to libraries dynamically/statically | # NVIDIA HPC Compiler Options | Flag | Description | |:----|:--------| | `-acc` | Enable OpenACC directives. | | `-tp processor` | Specify the type(s) of the target processor(s). processor can be sandybridge-64, haswell-64, or skylake-64. | | `-mtune=processor` | Tune to processor everything applicable about the generated code, except for the ABI and the set of available instructions. processor can be sandybridge, ivybridge, haswell, broadwell or skylake-avx512. Some older programs/makefile might use -mcpu that is deprecated | | `-fast` | Generally optimal set of flags. | | `-Mipa` | Invokes interprocedural analysis and optimization. | | `-Munroll` | Controls loop unrolling. | | `-Minfo` | Prints informational messages regarding optimization and code generation to standard output as compilation proceeds.| | `-shared` | Instructs the linker to generate a shared object file. Implies `-fpi`. | | `-Bstatic` | Statically link all libraries, including the PGI runtime. | # Compiling and Running Serial Codes <pre> [2018-02-22 08:47.27] ~/Workshop/2017XSEDEBootCamp/OpenMP [alp514.sol-d118](842): icc -o laplacec laplace_serial.c [2018-02-22 08:47.46] ~/Workshop/2017XSEDEBootCamp/OpenMP [alp514.sol-d118](843): ./laplacec Maximum iterations [100-4000]? 1000 ---------- Iteration number: 100 ------------ [995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67 ---------- Iteration number: 200 ------------ [995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28 ---------- Iteration number: 300 ------------ [995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49 ---------- Iteration number: 400 ------------ [995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59 ---------- Iteration number: 500 ------------ [995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66 ---------- Iteration number: 600 ------------ [995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70 ---------- Iteration number: 700 ------------ [995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73 ---------- Iteration number: 800 ------------ [995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75 ---------- Iteration number: 900 ------------ [995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77 ---------- Iteration number: 1000 ------------ [995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78 Max error at iteration 1000 was 0.034767 Total time was 4.099030 seconds. </pre> # Compilers for OpenMP & TBB * OpenMP support is built-in. | Compiler | OpenMP Flag | TBB Flag | |:---:|:---:|:---:| | GNU | `-fopenmp` | `-L$TBBROOT/lib/intel64_lin/gcc4.4 -ltbb` | | Intel | `-qopenmp` | `-L$TBBROOT/lib/intel64_lin/gcc4.4 -ltbb` | | PGI/nVIDIA HPC SDK | `-mp` | * TBB is available as part of Intel Compiler suite. * `$TBBROOT` depends on the Intel Compiler Suite you want to use. <pre> [alp514.sol](1083): module show intel ------------------------------------------------------------------- /share/Apps/share/Modules/modulefiles/toolchain/intel/16.0.3: module-whatis Set up Intel 16.0.3 compilers. conflict pgi conflict gcc setenv INTEL_LICENSE_FILE /share/Apps/intel/licenses/server.lic setenv IPPROOT /share/Apps/intel/compilers_and_libraries_2016.3.210/linux/ipp setenv MKLROOT /share/Apps/intel/compilers_and_libraries_2016.3.210/linux/mkl setenv TBBROOT /share/Apps/intel/compilers_and_libraries_2016.3.210/linux/tbb ... snip ... </pre> # Compiling and Running OpenMP Codes <pre> [2018-02-22 08:47.56] ~/Workshop/2017XSEDEBootCamp/OpenMP/Solutions [alp514.sol-d118](845): icc -qopenmp -o laplacec laplace_omp.c [2018-02-22 08:48.09] ~/Workshop/2017XSEDEBootCamp/OpenMP/Solutions [alp514.sol-d118](846): OMP_NUM_THREADS=4 ./laplacec Maximum iterations [100-4000]? 1000 ---------- Iteration number: 100 ------------ [995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67 ---------- Iteration number: 200 ------------ [995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28 ---------- Iteration number: 300 ------------ [995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49 ---------- Iteration number: 400 ------------ [995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59 ---------- Iteration number: 500 ------------ [995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66 ---------- Iteration number: 600 ------------ [995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70 ---------- Iteration number: 700 ------------ [995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73 ---------- Iteration number: 800 ------------ [995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75 ---------- Iteration number: 900 ------------ [995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77 ---------- Iteration number: 1000 ------------ [995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78 Max error at iteration 1000 was 0.034767 Total time was 2.459961 seconds. </pre> # Compilers for MPI Programming * MPI is a library, not a compiler, built or compiled for different compilers. | Language | Compile Command | |:--------:|:---:| | Fortran | `mpif90` | | C | `mpicc` | | C++ | `mpicxx` | * Usage: `<compiler> <options> <source code>` <pre> [2017-10-30 08:40.30] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions [alp514.sol](1096): mpif90 -o laplace_f90 laplace_mpi.f90 [2017-10-30 08:40.45] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions [alp514.sol](1097): mpicc -o laplace_c laplace_mpi.c [2017-10-30 08:40.57] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions </pre> * The MPI compiler command is just a wrapper around the underlying compiler. <pre> [alp514.sol](1080): mpif90 -show ifort -fPIC -I/share/Apps/mvapich2/2.1/intel-16.0.3/include -I/share/Apps/mvapich2/2.1/intel-16.0.3/include -L/share/Apps/mvapich2/2.1/intel-16.0.3/lib -lmpifort -Wl, -rpath -Wl,/share/Apps/mvapich2/2.1/intel-16.0.3/lib -Wl, --enable-new-dtags -lmpi </pre> # MPI Libraries * There are two different MPI implementations commonly used. * `MPICH`: Developed by Argonne National Laboratory. - used as a starting point for various commercial and open source MPI libraries - `MVAPICH2`: Developed by D. K. Panda with support for InfiniBand, iWARP, RoCE, and Intel Omni-Path. (default MPI on Sol), - `Intel MPI`: Intel's version of MPI. __You need this for Xeon Phi MICs__, - available in cluster edition of Intel Compiler Suite. Not available at Lehigh. - `IBM MPI` for IBM BlueGene, and - `CRAY MPI` for Cray systems. * `OpenMPI`: A Free, Open Source implementation from merger of three well know MPI implementations. Can be used for commodity network as well as high speed network. - `FT-MPI` from the University of Tennessee, - `LA-MPI` from Los Alamos National Laboratory, - `LAM/MPI` from Indiana University # Running MPI Programs * Every MPI implementation come with their own job launcher: `mpiexec` (MPICH,OpenMPI & MVAPICH2), `mpirun` (OpenMPI) or `mpirun_rsh` (MVAPICH2). * Example: `mpiexec [options] <program name> [program options]` * Required options: number of processes and list of hosts on which to run program. | Option Description | mpiexec | mpirun | mpirun_rsh | |:-----------:|:-------:|:------:|:----------:| | run on `x` cores | -n x | -np x | -n x | | location of the hostfile | -f filename | -machinefile filename | -hostfile filename | * To run a MPI code, you need to use the launcher from the same implementation that was used to compile the code. * For e.g.: You cannot compile code with OpenMPI and run using the MPICH and MVAPICH2's launcher. - Since MVAPICH2 is based on MPICH, you can launch MVAPICH2 compiled code using MPICH's launcher. * SLURM scheduler provides `srun` as a wrapper around all mpi launchers. # Compiling and Running MPI Codes <pre> [2018-02-22 08:48.27] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions [alp514.sol-d118](848): mpicc -o laplacec laplace_mpi.c [2018-02-22 08:48.41] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions [alp514.sol-d118](849): mpiexec -n 4 ./laplacec Maximum iterations [100-4000]? 1000 ---------- Iteration number: 100 ------------ [995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67 ---------- Iteration number: 200 ------------ [995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28 ---------- Iteration number: 300 ------------ [995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49 ---------- Iteration number: 400 ------------ [995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59 ---------- Iteration number: 500 ------------ [995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66 ---------- Iteration number: 600 ------------ [995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70 ---------- Iteration number: 700 ------------ [995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73 ---------- Iteration number: 800 ------------ [995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75 ---------- Iteration number: 900 ------------ [995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77 ---------- Iteration number: 1000 ------------ [995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78 Max error at iteration 1000 was 0.034767 Total time was 1.030180 seconds. </pre> --> --- class: inverse, middle # Scheduler Basics --- # Cluster Environment * A cluster is a group of computers (nodes) that works together closely. .pull-left[ * Two types of nodes: - Head/Login Node, - Compute Node * Multi-user environment. * Each user may have multiple jobs running simultaneously. ] .pull-right[ <img width = '640px' src = 'assets/img/solnetwork.png'> ] --- # How does a cluster look? .pull-left[  ] .pull-right[  ] --- # Scheduler & Resource Management * A software that manages resources (CPU time, memory, etc) and schedules job execution. - Simple Linux Utility for Resource Management (SLURM) - Scheduler - Resource Manager - Allocation Manager * A job can be considered as a user’s request to use a certain amount of resources for a certain amount of time. * The Scheduler or queuing system determines - order jobs are executed, and - which node(s) jobs are executed. --- # How to run jobs * All compute intensive jobs are scheduled. - Write a script to submit jobs to a scheduler. - need to have some background in shell scripting (bash/tcsh). * Have an understanding of * Resources required (which depends on configuration) * number of nodes, * number of processes per node, and * memory required to run your job - Amount of time resources are required? - have an estimate for how long your job will run. - jobs have a max walltime of 2 or 3 days. - can your jobs be restarted from a checkpoint. * Which partition to submit jobs? * SLURM uses the term _partition_ instead of _queue_. --- # Job Scheduling .pull-left[ * Map jobs onto the node-time space. - Assuming CPU time is the only resource. * Need to find a balance between - honoring the order in which jobs are received, and - maximizing resource utilization. ] .pull-right[  ] --- # Backfilling .pull-left[ * A strategy to improve utilization: - Allow a job to jump ahead of others when there are enough idle nodes. - Must not affect the estimated start time of the job with the highest priority. ] .pull-right[ ] --- # How much time must I request * Ask for an amount of time that is - long enough for your job to complete, and - as short as possible to increase the chance of backfilling. .pull-left[  ] -- .pull-right[  ] --- # How does backfilling work?  * [Adaptive Computing](http://docs.adaptivecomputing.com/suite/8-0/basic/help.htm#topics/moabWorkloadManager/topics/optimization/backfill.html%3FTocPath%3DMoab%2520Workload%2520Manager%7COptimizing%2520Scheduling%2520Behavior%2520-%2520Backfill%252C%2520Node%2520Sets%7C_____2) --- # Available Queues on Sol | Partition Name | Max Runtime in hours | Max SU consumed node per hour | |:----------:|:--------------------:|:--------------------:| | lts/lts-gpu | 72 | 19/20 | | im1080/im1080-gpu | 48 | 20/24 | | eng/eng-gpu | 72 | 22/24 | | engc | 72 | 24 | | himem | 72 | 48 | | enge/engi | 72 | 36 | | im2080/im2080-gpu | 48 | 28/36 | | im2080-gpu | 48 | 36 | | chem/health | 48 | 36 | | debug | 1 | 16 | | hawkcpu | 72 | 52 | | hawkmem | 72 | 52 | | hawkgpu | 72 | 48 | | infolab | 72 | 52 | --- # How much memory? * The amount of installed memory less the amount that is used by the operating system and other utilities. * A general rule of thumb on most HPC resources: leave 1-2GB for the OS to run. | Partition | Max Memory/core (GB) | Recommended Memory/Core (GB) | |:---------:|:--------------------:|:----------------------------:| | lts | 6.4 | 6.3 | | eng/im1080/enge/engi/im2080/chem/health | 5.3 | 5.2 | | engc | 2.66 | 2.5 | | himem | 32 | 31.8 | | hawkcpu/infolab | 7.38 | 7.3 | | hawkmem | 29.5 | 29.4 | | hawkgpu | 4.0 | 3.9 | * <code>if you need to run a single core job that requires 10GB memory in the im1080 partition, you need to request 2 cores even though you are only using 1 core.</code> ??? | Partition | Max Memory/core (GB) | Recommended Memory/Core (GB) | |:---------:|:--------------------:|:----------------------------:| | hawk | 7.38 | 7.2 | | hawk-tb | 29.54 | 29 | | hawk-gpu | 3.7 | 3.6 | --- # File Systems - Where to run jobs? * There are three distinct file spaces on Sol. * __HOME__, your home directory on Sol, 150GB quota default. More if PI has purchased a CEPH space. * __SCRATCH__, 500GB scratch storage on the local disk shared by all jobs running on the node. * __CEPHFS__, 22TB global parallel scratch for running jobs with a lifetime of 7 days. - Best Practices - Store all input files, submit scripts, and output files following job completion in HOME - Single node jobs, use SCRATCH to run your jobs and store temporary files - SCRATCH is deleted by the SLURM scheduler when job is complete, so make sure that you copy all required data back to HOME. - SLURM automatically creates `/scratch/${USER}/${SLURM_JOBID}` - All jobs, use CEPHFS. CEPHFS contents are kept for 14 days after your job ends. - SLURM automatically creates <code>/share/ceph/scratch/${USER}/${SLURM_JOBID}</code> --- class: inverse, middle # Job Manager Commands * Submission * Monitoring * Manipulating * Reporting --- # Job Types * Interactive Jobs: - Set up an interactive environment on compute nodes for users. - Will log you into a compute node and wait for your prompt. - Purpose: testing and debugging code. __Do not run jobs on head node!!!__ * All compute node have a naming convention __sol-[a,b,c,d,e]###__ * head node is __sol__. * Batch Jobs: - Executed using a batch script without user intervention. - Advantage: system takes care of running the job. - Disadvantage: cannot change sequence of commands after submission. - Useful for Production runs. - Workflow: write a script -> submit script -> take mini vacation -> analyze results. --- # Useful SLURM Directives | SLURM Directive | Description | |:---------------:|:-----------:| | --partition=queuename | Submit job to the <em>queuename</em> partition | | --time=hh:mm:ss | Request resources to run job for <em>hh</em> hours, <em>mm</em> minutes and <em>ss</em> seconds | | --nodes=m | Request resources to run job on <em>m</em> nodes | | --ntasks-per-node=n | Request resources to run job on <em>n</em> processors on each node requested | | --ntasks=n | Request resources to run job on a total of <em>n</em> processors | | --job-name=jobname | Provide a name, <em>jobname</em> to your job | | --output=filename.out | Write SLURM standard output to file filename.out | | --error=filename.err | Write SLURM standard error to file filename.err | | --mail-type=events | Send an email after job status events is reached. events can be NONE, BEGIN, END, FAIL, REQUEUE, ALL, TIME_LIMIT(_90,80)| | --mail-user=address | Address to send email | | --account=mypi | charge job to the __mypi__ account | | --gres=gpu:# | Specifies number of gpus requested in the gpu partitions, min 1 cpu per gpu | --- # Useful SLURM Directives (contd) * SLURM can also take short hand notation for the directives | Long Form | Short Form | |:---------:|:----------:| | --partition=queuename | -p queuename | | --time=hh:mm:ss | -t hh:mm:ss | | --nodes=m | -N m | | --ntasks=n | -n n | | --account=mypi | -A mypi | | --job-name=jobname | -J jobname | | --output=filename.out | -o filename.out | --- # SLURM Filename Patterns * SLURM allows for a filename pattern to contain one or more replacement symbols, which are a percent sign "%" followed by a letter (e.g. %j). | Pattern | Description | |:-------:|:-----------:| | %A | Job array's master job allocation number | | %a | Job array ID (index) number | | %J | jobid.stepid of the running job (e.g. "128.0") | | %j | jobid of the running job | | %N | short hostname. This will create a separate IO file per node | | %n | Node identifier relative to current job (e.g. "0" is the first node of the running job) This will create a separate IO file per node | | %s | stepid of the running job | | %t | task identifier (rank) relative to current job. This will create a separate IO file per task | | %u | User name | | %x | Job name | --- # Useful SLURM environmental variables * SLURM creates environmental variables that can be used in the submit script. | SLURM Command | Description | |:-------------:|:-----------:| | SLURM_SUBMIT_DIR | Directory where job was submitted | | SLURM_NTASKS | Total number of cores for job | | SLURM_JOB_NUM_NODES | Total number of nodes for job | | SLURM_JOB_NODELIST | List of nodes assigned to your job | | SLURM_JOB_ID or SLURM_JOBID | Job ID number given to this job | | SLURM_JOB_PARTITION | Partition job is running on | | SLURM_JOB_NAME | Name of the job | --- # Job Types: Interactive - Use `srun` command with SLURM Directives followed by `--pty /bin/bash`. * `srun --time=<hh:mm:ss> --nodes=<# of nodes> --ntasks-per-node=<# of core/node> -p <queue name> --pty /bin/bash` * If you have `soltools` module loaded, then use `interact` with at least one SLURM Directive. * `interact -t 20` [Assumes `-p lts -n 1 -N 1`] * Run a job interactively replace `--pty /bin/bash --login` with the appropriate command. * For e.g. `srun -t 1 -n 52 -p hawkcpu $(which lmp) -in in.lj -var x 1 -var n 10000` * Default values are 3 days, 1 node, 1 task and lts partition. --- # Job Types: Batch * Workflow: write a script -> submit script -> take mini vacation -> analyze results. * Batch scripts are written in bash, tcsh, csh or sh. * Add SLURM directives after the shebang line but before any shell commands. <pre> #!/bin/bash #SBATCH --time=1:00:00 #SBATCH --nodes=1 #SBATCH --ntasks-per-node=20 #SBATCH -p lts source /etc/profile.d/zlmod.sh cd ${SLURM_SUBMIT_DIR} </pre> * Submitting Batch Jobs: <pre> sbatch myjob.slr </pre> * `sbatch` can take `#SBATCH DIRECTIVES` as command line arguments. <pre> sbatch --time=1:00:00 --nodes=1 --ntasks-per-node=20 -p lts myjob.slr </pre> --- # Submit script for Serial Jobs <pre> #!/bin/bash #SBATCH --partition=lts #SBATCH --time=1:00:00 #SBATCH --nodes=1 #SBATCH --ntasks-per-node=1 #SBATCH -J myjob source /etc/profile.d/zlmod.sh cd ${SLURM_SUBMIT_DIR} #SBATCH --mail-type=ALL <--- this is a comment not a SLURM DIRECTIVE ./myjob < filename.in > filename.out # Example /share/Apps/examples/simple_jobs/laplace_serial << EOF 400 EOF </pre> --- # Submit script for OpenMP Job <pre> #!/bin/tcsh #SBATCH --partition=im1080 # Directives can be combined on one line #SBATCH --time=1:00:00 --nodes=1 --ntasks-per-node=20 #SBATCH --job-name=myjob source /etc/profile.d/zlmod.csh cd ${SLURM_SUBMIT_DIR} # Use either setenv OMP_NUM_THREADS 20 ./myjob < filename.in > filename.out # OR OMP_NUM_THREADS=20 ./myjob < filename.in > filename.out # Example OMP_NUM_THREADS=4 /share/Apps/examples/simple_jobs/laplace_omp << EOF 400 EOF exit </pre> --- # Submit script for MPI Job <pre> #!/bin/bash #SBATCH --partition=lts #SBATCH --time=1:00:00 #SBATCH --nodes=2 #SBATCH --ntasks-per-node=2 ## For --partition=im1080, ### use --ntasks-per-node=20 ### and --qos=nogpu #SBATCH --job-name=myjob source /etc/profile.d/zlmod.sh module load mvapich2 cd ${SLURM_SUBMIT_DIR} srun ./myjob < filename.in > filename.out # Example srun -n 4 /share/Apps/examples/simple_jobs/laplace_mpi << EOF 400 EOF exit </pre> --- # Submit script for LAMMPS GPU job <pre> #!/bin/bash #SBATCH --partition=hawkgpu # Directives can be combined on one line #SBATCH --time=1:00:00 #SBATCH --nodes=1 # 1 CPU can be be paired with only 1 GPU # 1 GPU can be paired with all 24 CPUs #SBATCH --ntasks-per-node=6 #SBATCH --gres=gpu:1 # Need both GPUs, use --gres=gpu:2 #SBATCH --job-name myjob source /etc/profile.d/zlmod.sh cd ${SLURM_SUBMIT_DIR} # Load LAMMPS Module module load lammps # Run LAMMPS for input file in.lj srun $(which lmp) -in in.lj -sf gpu -pk gpu 1 exit </pre> --- # Need to run multiple jobs * In sequence or serially: * Option 1: Submit jobs as soon as previous jobs complete. * Option 2: Submit jobs with a dependency. * [SLURM](https://confluence.cc.lehigh.edu/x/FqH0BQ#SLURM-SubmittingDependencyjobs): `sbatch --dependency=afterok:<JobID> <Submit Script>` * In parallel, use [GNU Parallel](https://confluence.cc.lehigh.edu/x/B6b0BQ). --- # Monitoring & Manipulating Jobs | SLURM Command | Description | |:-----------:|:-----------:|:-------------:| | squeue | check job status (all jobs) | | squeue -u username | check job status of user <em>username</em> | | squeue --start | Show <strong>estimated</strong> start time of jobs in queue | | scontrol show job jobid | Check status of your job identified by <em>jobid</em> | | scancel jobid | Cancel your job identified by <em>jobid</em> | | scontrol hold jobid | Put your job identified by <em>jobid</em> on hold | | scontrol release jobid | Release the hold that <strong>you put</strong> on <em>jobid</em> | * The following scripts written by RC staff can also be used for monitoring jobs. * __checkq__: `squeue` with additional useful option. * __checkload__: `sinfo` with additional options to show load on compute nodes. - load the `soltools` module to get access to RC staff created scripts. --- # Modifying Resources for Queued Jobs * Modify a job after submission but before starting: * `scontrol update SPECIFICATION jobid=<jobid>` - Examples of `SPECIFICATION`: * add dependency after a job has been submitted: `dependency=<attributes>` * change job name: `jobname=<name>` * change partition: `partition=<name>` * modify requested runtime: `timelimit=<hh:mm:ss>` * request gpus (when changing to one of the gpu partitions): `gres=gpu:<1-4>` * SPECIFICATIONs can be combined * for e.g. command to move a queued job to `im1080` partition and change timelimit to 48 hours for a job 123456 * `scontrol update partition=im1080 timelimit=48:00:00 jobid=123456` --- # Usage Reporting * [sacct](http://slurm.schedmd.com/sacct.html): displays accounting data for all jobs and job steps in the SLURM job accounting log or Slurm database. * [sshare](http://slurm.schedmd.com/sshare.html): Tool for listing the shares of associations to a cluster. * We have created scripts based on these to provide usage reporting: - `alloc_summary.sh` - included in your .bash_profile, - prints allocation usage on your login shell. - `balance` - prints allocation usage summary. --- # Online Usage Reporting * [Monthly usage summary](https://webapps.lehigh.edu/hpc/usage/dashboard.html) (updated daily) - [Scheduler Status](https://webapps.lehigh.edu/hpc/monitor) (updated every 15 mins) * [Current AY Usage Reports](https://webapps.lehigh.edu/hpc/monitor/ay2122.html) (updated daily) * [Current CY Usage Reports](https://webapps.lehigh.edu/hpc/monitor/cy2022.html) (updated daily) - Prior AY Usage Reports * [AY2021](https://webapps.lehigh.edu/hpc/monitor/ay2021.html) * [AY1920](https://webapps.lehigh.edu/hpc/monitor/ay1920.html) * [AY1819](https://webapps.lehigh.edu/hpc/monitor/ay1819.html) * [AY1718](https://webapps.lehigh.edu/hpc/monitor/ay1718.html) * [AY1617](https://webapps.lehigh.edu/hpc/monitor/ay1617.html) * <span class="alert">Usage reports restricted to Lehigh IPs</span> --- # Additional Help & Information * Issue with running jobs or need help to get started: <https://lts.lehigh.edu/help> - More Information * [Research Computing](https://go.lehigh.edu/rcwiki) * Training * [Seminars](https://go.lehigh.edu/hpcseminars) * [Workshops](https://go.lehigh.edu/hpcworkshops) * Subscribe * HPC Training Google Groups: <mailto:hpctraining-list+subscribe@lehigh.edu> * Research Computing Mailing List: <https://lists.lehigh.edu/mailman/listinfo/hpc-l> - My contact info * eMail: <alp514@lehigh.edu> * Tel: (610) 758-6735 * Location: Room 296, EWFM Computing Center * [Schedule](https://www.google.com/calendar/embed?src=alp514%40lehigh.edu&ctz=America/New_York ) --- class: inverse middle center # Thank You! # Questions? <!-- <br /> <br /> # Next Week: Open OnDemand ### Jupyter Notebooks, RStudio, MATLAB, Mathematica, SAS, Virtual Desktops and more -->