Who?

- Unit of Lehigh's Library & Technology Services within the Center for Innovation in Teaching & Learning

Our Mission

- We enable Lehigh faculty, researchers and scholars to achieve their goals by providing computational resources (hardware, software, and storage), consulting, and training.

Research Computing Staff

- Steve Anthony, System Administrator

- Dan Brashler, CAS Senior Computing Consultant

- Sachin Joshi, Data Analyst & Visualization Specialist

- Alex Pacheco, Manager & XSEDE Campus Champion

Introduction to Linux & HPC

Research Computing

Library & Technology Services

https://researchcomputing.lehigh.edu

About Us?

Background and Definitions

- Computational Science and Engineering

- Gain understanding, mainly through the analysis of mathematical models implemented on computers.

- Construct mathematical models and quantitative analysis techniques, using computers to analyze and solve scientific problems.

- Typically, these models require large amounts of floating-point calculations that are not possible on desktops and laptops.

- The field's growth drove the need for HPC and benefited from it.

- HPC

- High Performance Computing (HPC) is computation at the forefront of modern technology, often done on a supercomputer.

- Supercomputer

- A supercomputer is a computer at the frontline of current processing capacity, particularly speed of calculation.

Why use HPC?

- HPC may be the only way to achieve computational goals in a given amount of time

- Size: Many problems that are interesting to scientists and engineers cannot fit on a PC usually because they need more than a few GB of RAM, or more than a few hundred GB of disk.

- Speed: Many problems that are interesting to scientists and engineers would take a very long time to run on a PC, months or even years, whereas a problem that would take a month on a PC might take only a few hours on a supercomputer.

Parallel Computing

- Many calculations are carried out simultaneously

- Based on the principle that large problems can often be divided into smaller ones, which are then solved in parallel

- Parallel computers can be roughly classified according to the level at which the hardware supports parallelism.

- Multicore computing

- Symmetric multiprocessing

- Distributed computing

- Grid computing

- General-purpose computing on graphics processing units (GPGPU)

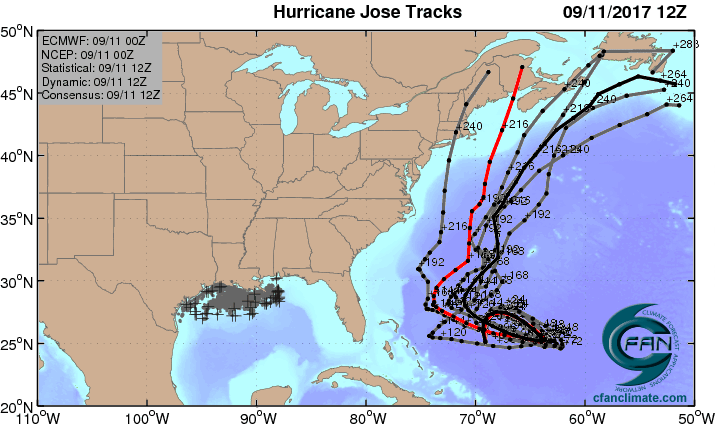

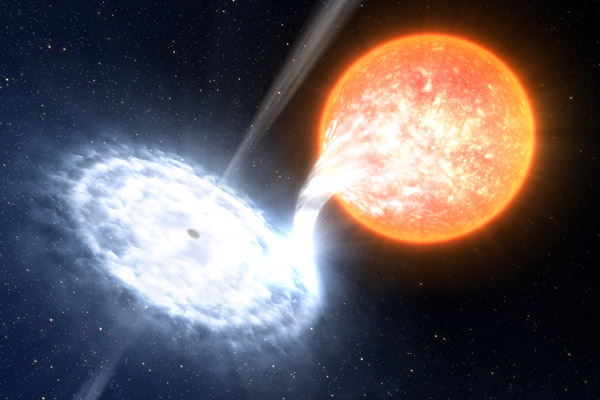

What does HPC do?

- Simulation of Physical Phenomena

- Storm Surge Prediction

- Black Holes Colliding

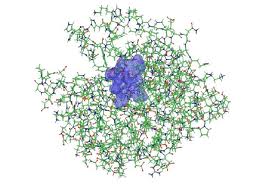

- Molecular Dynamics

- Data analysis and Mining

- Bioinformatics

- Signal Processing

- Fraud detection

- Visualization

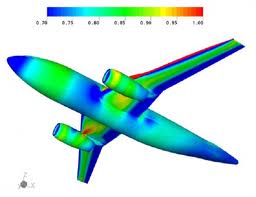

- Design

- Supersonic ballute

- Boeing 787 design

- Drug Discovery

- Oil Exploration and Production

- Automotive Design

- Art and Entertainment

HPC by Disciplines

- Traditional Disciplines

- Science: Physics, Chemistry, Biology, Material Science

- Engineering: Mechanical, Structural, Civil, Environmental, Chemical

- Non Traditional Disciplines

- Finance

- Predictive Analytics

- Trading

- Humanities

- Culturomics or cultural analytics: study human behavior and cultural trends through quantitative analysis of digitized texts, images and videos.

What is Linux?

- Linux is an operating system that evolved from a kernel created by Linus Torvalds when he was a student at the University of Helsinki.

- It is meant to be used as an alternative to other operating systems such as Windows, Mac OS, MS-DOS and Solaris.

- Linux is the most popular OS used on supercomputers

- From the June 2017 TOP500 list:

| OS Family | Count | Share % |
|---|---|---|
| Linux | 498 | 99.6 |
| Unix | 2 | 0.4 |

- If you are using a Supercomputer/High Performance Computer for your research, it will be based on a *nix OS.

- It is necessary to learn Linux (commands and shell scripting) if your research involves the use of High Performance Computing or Supercomputing resources.

Difference between Shell and Command

- What is a Shell?

- The command line interface is the primary interface to Linux/Unix operating systems.

- Shells are how command-line interfaces are implemented in Linux/Unix.

- Each shell has varying capabilities and features and the user should choose the shell that best suits their needs.

- The shell is simply an application running on top of the kernel and provides a powerful interface to the system.

- What is a command and how do you use it?

- A command is a directive to a computer program acting as an interpreter of some kind, in order to perform a specific task.

- A command prompt (or just prompt) is a sequence of one or more characters used in a command-line interface to indicate readiness to accept commands.

- Its intent is to literally prompt the user to take action.

- A prompt usually ends with one of the characters $, %, #, :, > and often includes other information, such as the path of the current working directory.

Types of Shell

- sh : Bourne Shell

- Developed by Stephen Bourne at AT&T Bell Labs

- csh : C Shell

- Developed by Bill Joy at University of California, Berkeley

- ksh : Korn Shell

- Developed by David Korn at AT&T Bell Labs

- backward-compatible with the Bourne shell and includes many features of the C shell

- bash : Bourne Again Shell

- Developed by Brian Fox for the GNU Project as a free software replacement for the Bourne shell (sh).

- Default shell on most Linux distributions, and on Mac OS X until macOS Catalina (10.15) switched the default to zsh

- tcsh : TENEX C Shell

- Developed by Ken Greer at Carnegie Mellon University

- It is essentially the C shell with programmable command line completion, command-line editing, and a few other features.

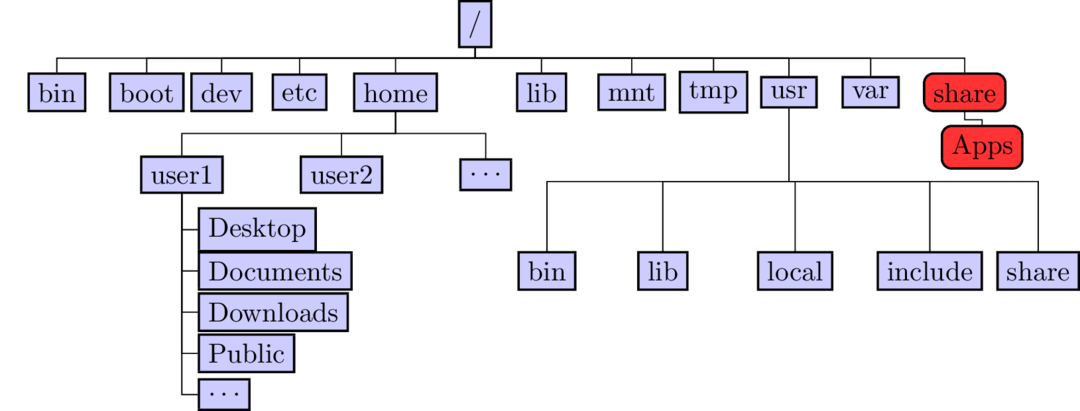

Directory Structure

- All files are arranged in a hierarchical structure, like an inverted tree.

- The top of the hierarchy is traditionally called root (written as a slash / )

Relative & Absolute Path

- Path means a position in the directory tree.

- You can use either the relative path or absolute path

- In relative path expression

- . (one dot or period) is the current working directory

- .. (two dots or periods) is one directory up

- You can combine . and .. to navigate the file system hierarchy.

- The path is not defined uniquely and depends on the current working directory.

- is unique only if your current working directory is your home directory.

- In absolute path expression

- The path is defined uniquely and does not depend on the current working directory

- /tmp is unique since /tmp is an absolute path
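A minimal sketch of relative versus absolute paths in practice. It builds a scratch tree with mktemp -d, so every directory name here is an assumption for illustration, not a path on Sol.

```shell
#!/bin/bash
# Build a throwaway tree under a temporary directory (directory names are hypothetical)
base=$(mktemp -d)
mkdir -p "$base/project/data" "$base/project/scripts"

cd "$base/project/scripts"   # absolute path: means the same thing from anywhere
cd ../data                   # relative path: up one level (..), then into data
here=$(pwd)
echo "$here"                 # .../project/data
cd .                         # . is the current directory, so nothing changes
cd ../..                     # up two levels, back to the top of the tree
there=$(pwd)
echo "$there"                # same as $base

cd / && rm -rf "$base"       # clean up, leaving via an absolute path
```

The same relative path (../data) would point somewhere else if run from a different directory, which is exactly why relative paths are not unique.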

Variables

- Linux permits the use of variables, similar to any programming language such as C, C++, Fortran, etc.

- A variable is a named object that contains data used by one or more applications.

- There are two types of variables, Environment and User Defined, and they can contain a number, a character or a string of characters.

- Environment variables provide a simple way to share configuration settings between multiple applications and processes in Linux.

- By convention, environment variables are often named using all uppercase letters, e.g. PATH, LD_LIBRARY_PATH, LD_INCLUDE_PATH, TEXINPUTS, etc.

- To reference a variable (environment or user defined), prepend $ to the name of the variable, e.g. $PATH, ${LD_LIBRARY_PATH}

- It's good practice to protect your variable name within {...}, such as ${PATH}, when referencing it. (We'll see an example in a few slides.)

- The command printenv will list the current environment variables.
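A small sketch of referencing variables and of why the {...} protection matters; greeting is a made-up variable name used only for this example.

```shell
#!/bin/bash
# A user-defined shell variable (the name "greeting" is hypothetical)
greeting=Hello
# Without braces bash reads the name "greeting_world", which is unset,
# so this prints an empty line
echo "$greeting_world"
# Braces mark where the name ends, so this prints Hello_world
echo "${greeting}_world"
# Environment variables are referenced the same way
echo "${HOME}"
# printenv only lists environment variables, so it does not find greeting
printenv greeting || echo "greeting is not an environment variable"
```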

Variables (contd)

- Rules for Variable Names

- Variable names must start with a letter or underscore

- Numbers can be used anywhere else

- DO NOT USE special characters such as @, #, %, $

- Case sensitive

- Examples

- Allowed: VARIABLE, VAR1234able, var_name, VAR

- Not Allowed: 1VARIABLE, %NAME, $myvar, VAR@NAME

- Assigning value to a variable (no spaces around the =)

- Shell: myvar=somevalue

- Environment: export name=value

- You can convert any Shell variable to an Environment variable using the export command

- Convert to Environment: export myvar
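To see the difference between a shell variable and an environment variable, the sketch below starts a child bash process before and after export; myvar and somevalue are the placeholder names from above.

```shell
#!/bin/bash
# Shell variable: visible in the current shell only (the name is an example)
myvar=somevalue
# A child process (here a new bash) does not inherit plain shell variables
bash -c 'echo "child sees: [$myvar]"'    # child sees: []
# export converts it into an environment variable that children inherit
export myvar
bash -c 'echo "child sees: [$myvar]"'    # child sees: [somevalue]
```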

Linux Commands

- man: shows the manual for a command or program, e.g. man pwd

- pwd: print working directory, gives the absolute path of your current location in the directory hierarchy

- cd dirname: change to the folder called dirname

- If you omit the directory name, you will end up in your home directory

- Try it: enter cd

- mkdir dirname: create a directory called dirname

- Create a directory, any name you want, and cd to that directory

- I would enter the command mkdir ise407 followed by cd ise407

- Use the pwd command to check your current location

Linux Commands (contd)

- cp file1 file2: command to copy file1 to file2

- You can use absolute or relative paths for the source and destination, e.g. cp ../file .

- If you need to copy over a directory and its contents, add the -r flag, e.g. cp -r /home/alp514/sum2017 .

- rm file1: delete a file called file1

- Unlike on other OSes, there is no trash can: once you delete a file, it cannot be recovered.

- Use the -r flag to delete directories recursively

- Use -i to be prompted before each deletion

- ls: list contents of the current directory

- If you provide a directory path as an argument, then the contents of that directory will be listed

- echo: prints whatever follows to the screen

- echo $HOME: prints the contents of the variable HOME, i.e. your home directory, to the screen

- alias: create a shortcut to another command, or a short name that executes a long string, e.g. alias rm="/bin/rm -i"
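A sketch of a short practice session with the commands above. It works in a scratch directory from mktemp -d, and the file and directory names (notes.txt, results, ...) are hypothetical.

```shell
#!/bin/bash
# Practise the commands inside a scratch directory (all names are hypothetical)
workdir=$(mktemp -d)
cd "$workdir"
mkdir ise407 && cd ise407        # create a directory and move into it
pwd                              # absolute path of the current location
echo "some text" > notes.txt     # make a small file to work with
cp notes.txt copy.txt            # copy a file
mkdir results
cp notes.txt results/            # copy into a directory (relative paths)
cp -r results results_backup     # -r copies a directory and its contents
ls                               # lists copy.txt, notes.txt, results, results_backup
rm copy.txt                      # removed for good: there is no trash can
rm -r results_backup             # -r removes a directory recursively
listing=$(ls)                    # now only notes.txt and results remain
echo "$listing"
cd / && rm -rf "$workdir"        # clean up
```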

File Editing

- The two most commonly used editors on Linux/Unix systems are:

- vi or vim (vi improved)

- emacs

- vi/vim is installed by default on Linux/Unix systems and has only a command line interface (CLI).

- emacs has both a CLI and a graphical user interface (GUI).

- Other editors that you may come across on *nix systems

- kate: default editor for KDE.

- gedit: default text editor for GNOME desktop environment.

- gvim: GUI version of vim

- pico: console based plain text editor

- nano: GNU.org clone of pico

- kwrite: editor by KDE.

vi/emacs commands

| Inserting/Appending Text | vi Command |
|---|---|
| insert at cursor | i |
| insert at beginning of line | I |
| append after cursor | a |
| append at end of line | A |
| open new line below current line in insert mode | o |
| open new line above current line in insert mode | O |
| append at end of word | ea |
| exit insert mode | ESC |

- C: Control Key

- ESC: Escape Key

- M: Meta key, often the Alt key; pressing ESC before the key also works

| Cursor Movement | vi Command | emacs Command |
|---|---|---|
| move left | h | C-b |
| move down | j | C-n |
| move up | k | C-p |
| move right | l | C-f |
| jump to beginning of line | ^ | C-a |
| jump to end of line | $ | C-e |
| goto line n | nG | M-x goto-line n |
| goto top of file | 1G | M-< |
| goto end of file | G | M-> |
| move one page up | C-b | M-v |
| move one page down | C-f | C-v |

vi/emacs commands

| File Manipulation | vi Command | emacs Command |
|---|---|---|
| save file | :w | C-x C-s |
| save file and exit | :wq | |
| quit | :q | C-x C-c |
| quit without saving | :q! | |
| delete a line | dd | C-a C-k |
| delete n lines | ndd | C-a M-n C-k |
| paste deleted line after cursor | p | C-y |
| paste before cursor | P | |
| undo edit | u | C-_ or C-x u |
| delete from cursor to end of line | D | C-k |

| File Manipulation | vi Command | emacs Command |
|---|---|---|
| replace a character | r | |
| join next line to current | J | |
| change a line | cc | |
| change a word | cw | |
| change to end of line | c$ | |
| delete a character | x | C-d |
| delete a word | dw | M-d |
| edit/open file | :e file | C-x C-f file |
| insert file | :r file | C-x i file |

File Permission

- In *NIX OS’s, you have three types of file permissions

- read (r)

- write (w)

- execute (x)

- for three types of users

- user

- group

- world i.e. everyone else who has access to the system

\[ d\underbrace{rwx}_{u}\overbrace{r-x}^g\underbrace{r-x}_o \]

## -rw-r--r-- 1 apacheco staff 53423 Feb 13 2018 Rplot.jpeg

## drwxr-xr-x 8 apacheco staff 256 Feb 26 2017 USmap

## drwxr-xr-x 7 apacheco staff 224 Feb 26 2017 assets

- The first character signifies type of file

- d: directory

- -: regular file

- l: symbolic link

- The next three characters (the first triad) signify what the owner can do

- The second triad signifies what group members can do

- The third triad signifies what everyone else can do

File Permission

- Read carries a weight of 4

- Write carries a weight of 2

- Execute carries a weight of 1

- The weights are added to give a value of 7 (rwx), 6 (rw), 5 (rx) or 3 (wx) permissions.

- chmod is a *NIX command to change permissions on a file

- To give user rwx, group rx and world x permission, the command is: chmod 751 filename

- To give only the user rwx, the command is: chmod 700 filename

- Instead of using numerical permissions you can also use symbolic mode

- u/g/o or a user/group/world or all i.e. ugo

- +/- Add/remove permission

- r/w/x read/write/execute

- Give everyone execute permission:

chmod a+x hello.sh or chmod ugo+x hello.sh

- Remove group and world read & write permission:

chmod go-rw hello.sh
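A sketch that applies the numeric and symbolic chmod forms to a scratch file and reads the result back from the first 10 characters of ls -l. The helper perm is hypothetical, introduced just for this example.

```shell
#!/bin/bash
# Show the permission string (first 10 characters of ls -l) for a file
perm() { ls -l "$1" | cut -c1-10; }

f=$(mktemp)                  # scratch file; its name is generated, not significant
chmod 751 "$f"               # user 7=4+2+1 (rwx), group 5=4+1 (r-x), world 1 (--x)
a=$(perm "$f"); echo "$a"    # -rwxr-x--x
chmod 700 "$f"               # only the user gets rwx
b=$(perm "$f"); echo "$b"    # -rwx------
chmod go+r "$f"              # symbolic mode: add read for group and world
c=$(perm "$f"); echo "$c"    # -rwxr--r--
chmod a-x "$f"               # symbolic mode: remove execute for everyone
d=$(perm "$f"); echo "$d"    # -rw-r--r--
rm -f "$f"
```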

Scripting Language

- A scripting language or script language is a programming language that supports the writing of scripts.

- Scripting Languages provide a higher level of abstraction than standard programming languages.

- Compared to languages such as C or Fortran, scripting languages such as bash often do not distinguish between data types: integers, real values, strings, etc.

- Scripting Languages tend to be good for automating the execution of other programs.

- analyzing data

- running daily backups

- They are also good for writing a program that is going to be used only once and then discarded.

- A script is a program written for a software environment that automates the execution of tasks which could alternatively be executed one-by-one by a human operator.

- The majority of script programs are “quick and dirty”, where the main goal is to get the program written quickly.

Writing your first script

- Write a script

- A shell script is a file that contains ASCII text.

- Create a file, hello.sh with the following lines

#!/bin/bash

# My First Script

echo "Hello World!"

- Set permissions

chmod +x hello.sh

- Execute the script

./hello.sh

## Hello World!

Breaking down the script

- My First Script

## #!/bin/bash

## # My First Script

## echo "Hello World!"

- The first line is called the "shebang" line. It tells the OS which interpreter to use.

- Other options are:

- sh: #!/bin/sh

- ksh: #!/bin/ksh

- csh: #!/bin/csh

- tcsh: #!/bin/tcsh

- The second line is a comment. All comments begin with #.

- The third line tells the OS to print "Hello World!" to the screen.

- Slides written in R Markdown. ## in orange implies screen output

Quotation

- Double Quotation " "

- Enclosed string is expanded

- Single Quotation ' '

- Enclosed string is read literally

- Back Quotation ` `

- Used for command substitution

- Enclosed string is executed as a command

- In bash, you can also use $( ) instead of ` `

myvar=hello

myname=Alex

echo "$myvar $myname"

echo '$myvar $myname'

echo $(pwd)

## hello Alex

## $myvar $myname

## /Users/apacheco/Tutorials/DCVS/bitbucket/lurc

Arithmetic Operations

| Operation | Operator | Example |
|---|---|---|
| Addition | + | $((1+2)) |
| Subtraction | - | let c=$a-$b |
| Multiplication | * | $[$a*$b] |
| Division | / | `expr $a / $b` |
| Exponentiation | ** | $[2**3] |
| Modulo | % | let c%=5 |

- You can use C-style increment operators (let c++)

- Only integer arithmetic works in bash

- Use bc or awk for floating point arithmetic

- echo "3.8 + 4.2" | bc

- echo "scale=5; 2/5" | bc

- bc <<< "scale=5; 2/5"

- echo 3.8 4.2 | awk '{print $1*$2}'
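A sketch of the integer operators with two example values, plus awk for a non-integer result; the values of a and b are arbitrary.

```shell
#!/bin/bash
# Integer arithmetic in bash; a and b are example values
a=10; b=4
echo $(( a + b ))            # 14
echo $(( a - b ))            # 6
echo $(( a * b ))            # 40
echo $(( a / b ))            # 2  (integer division truncates)
echo $(( a % b ))            # 2
echo $(( 2 ** 3 ))           # 8
let "c = a * b"; echo $c     # 40 (quotes stop the shell from globbing *)
let c++; echo $c             # 41
expr $a / $b                 # 2  (expr needs spaces around the operator)
# Floating point: hand the work to awk
echo $a $b | awk '{print $1/$2}'   # 2.5
```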

Declare command

- Use the declare command to set variable and function attributes.

- Create a constant, i.e. read-only, variable: declare -r var or declare -r varName=value

- Create an integer variable: declare -i var or declare -i varName=value

- You can carry out arithmetic operations on variables declared as integers

j=10/5 ; echo $j

## 10/5

declare -i j ; j=10/5 ; echo $j

## 2

Flow Control

- Shell Scripting Languages execute commands in sequence similar to programming languages such as C, Fortran, etc.

- Control constructs can change the sequential order of commands.

- Conditionals: if

- Loops: for, while, until

Conditionals: if

- An if ... then construct tests whether the exit status of a condition is 0, and if so, executes one or more commands.

if [ condition1 ]

then

some commands

fi

- If the exit status of a condition is non-zero, an if ... then ... else construct lets bash run a different set of commands

if [ condition1 ]; then

some commands

else

some commands

fi

- You can also add another if statement, i.e. else if or elif, if you want to check another condition

if [ condition1 ]; then

some commands

elif [ condition2 ]; then

some commands

else

some commands

fi
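As a concrete instance of the if/elif/else template, the sketch below classifies an integer; classify is a hypothetical function name.

```shell
#!/bin/bash
# Classify an integer with if / elif / else (classify is a hypothetical name)
classify() {
  local n=$1
  if [ "$n" -lt 0 ]; then
    echo "negative"
  elif [ "$n" -eq 0 ]; then
    echo "zero"
  else
    echo "positive"
  fi
}
classify -3    # negative
classify 0     # zero
classify 7     # positive
```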

Comparison operators

- integers and strings

| Operation | Integer | String |
|---|---|---|
| equal to | if [ 1 -eq 2 ] | if [ "$a" == "$b" ] |
| not equal to | if [ $a -ne $b ] | if [ "$a" != "$b" ] |
| greater than | if [ $a -gt $b ] | |
| greater than or equal to | if [ 1 -ge $b ] | |
| less than | if [ $a -lt 2 ] | |
| less than or equal to | if [ $a -le $b ] | |
| zero length or null | | if [ -z "$a" ] |
| non zero length | | if [ -n "$a" ] |
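A sketch contrasting integer and string comparison, and showing why string tests such as -n should quote their variable; a, b and empty are example variables.

```shell
#!/bin/bash
# Integer vs string comparison (a, b and empty are example variables)
a=10; b=9
if [ "$a" -gt "$b" ]; then echo "as integers, 10 > 9"; fi
if [[ "$a" < "$b" ]]; then echo "as strings, \"10\" sorts before \"9\""; fi

empty=""
# Unquoted, [ -n $empty ] becomes [ -n ], which is true: a classic trap
if [ -n $empty ]; then echo "unquoted -n: wrongly reports a non-empty string"; fi
# Quoted, the test sees an empty string and correctly fails
if [ -n "$empty" ]; then echo "never printed"; else echo "quoted -n: empty string"; fi
```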

Other Operators

- File Test Operators

| Operation | Example |
|---|---|
| file exists | if [ -e .bashrc ] |
| file is a regular file | if [ -f .bashrc ] |
| file is a directory | if [ -d /home ] |
| file is not zero size | if [ -s .bashrc ] |
| file has read permission | if [ -r .bashrc ] |
| file has write permission | if [ -w .bashrc ] |
| file has execute permission | if [ -x .bashrc ] |

- Logical Operators

| Operation | Example |
|---|---|
| NOT | if [ ! -e .bashrc ] |
| AND | if [ $a -eq 2 ] && [ $x -gt $y ] |
| OR | if [[ $a -gt 0 \|\| $x -lt 5 ]] |
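A sketch combining the file test and logical operators in one if/elif chain; describe is a hypothetical helper, and the scratch file comes from mktemp.

```shell
#!/bin/bash
# Report what kind of path was given (describe is a hypothetical helper)
describe() {
  local p=$1
  if [ ! -e "$p" ]; then
    echo "$p does not exist"
  elif [ -d "$p" ]; then
    echo "$p is a directory"
  elif [ -f "$p" ] && [ -s "$p" ]; then
    echo "$p is a non-empty regular file"
  elif [ -f "$p" ]; then
    echo "$p is an empty regular file"
  else
    echo "$p is some other kind of file"
  fi
}
tmp=$(mktemp)          # an empty scratch file
describe "$tmp"        # ... is an empty regular file
echo data > "$tmp"
describe "$tmp"        # ... is a non-empty regular file
describe /             # / is a directory
rm -f "$tmp"
describe "$tmp"        # ... does not exist
```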

Nested ifs

- if statements can be nested and/or simplified using logical operators

if [ $a -gt 0 ]; then

if [ $a -lt 5 ]; then

echo "The value of a lies somewhere between 0 and 5"

fi

fi

if [[ $a -gt 0 && $a -lt 5 ]]; then

echo "The value of a lies somewhere between 0 and 5"

fi

if [[ $a -gt 0 || $x -lt 5 ]]; then

echo "a is positive or x is less than 5"

fi

Loops

- A loop is a block of code that iterates a list of commands.

- for is the basic loop construct

for arg in list

do

some commands

done

- while (until) tests for a condition at the top of a loop, and keeps looping as long as that condition is true (false)

while [ condition ]; do

some commands

done

until [ condition ]; do

some commands

done

Loop Example

#!/bin/bash

echo -n " Enter a number less than 10: "

read counter

factorial=1

for i in $(seq 1 $counter); do

let factorial*=$i

done

echo $factorial

#!/bin/bash

echo -n " Enter a number less than 10: "

read counter

factorial=1

while [ $counter -gt 0 ]; do

factorial=$(( $factorial * $counter ))

counter=$(( $counter - 1 ))

done

echo $factorial

#!/bin/bash

echo -n " Enter a number less than 10: "

read counter

factorial=1

until [ $counter -le 1 ]; do

factorial=$[ $factorial * $counter ]

let counter-=1

done

echo $factorial

bash factorial.sh << EOF

10

EOF

## Enter a number less than 10: 3628800

bash factorial1.sh << EOF

10

EOF

## Enter a number less than 10: 3628800

bash factorial2.sh << EOF

10

EOF

## Enter a number less than 10: 3628800

Arrays

- Array elements may be initialized with the variable[xx] notation: variable[xx]=1

- Initialize an array during declaration: name=(firstname 'last name')

- Reference an element i of an array name: ${name[i]}

- Print the whole array: ${name[@]}

- Print the length of the array: ${#name[@]}

- Print the length of element i of array name: ${#name[i]}

- ${#name} prints the length of the first element of the array

- Add an element to the front of an existing array: name=(title "${name[@]}")

- Copy an array name to an array user: user=("${name[@]}")

- Concatenate two arrays: nameuser=("${name[@]}" "${user[@]}")

- Quote "${name[@]}" so that elements containing spaces, such as 'last name', stay intact

- Delete an entire array: unset name

- Remove an element i from an array: unset 'name[i]'

- The first array index is zero (0)
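A sketch that exercises the array operations listed above, including what goes wrong when ${name[@]} is left unquoted. The element values are the same placeholders used in the list.

```shell
#!/bin/bash
name=(firstname 'last name')          # two elements; the second contains a space
echo "${name[1]}"                     # last name   (indices start at 0)
echo "${#name[@]}"                    # 2           (number of elements)
echo "${#name[1]}"                    # 9           (length of "last name")
echo "${#name}"                       # 9           (length of element 0, "firstname")
name=(title "${name[@]}")             # add an element at the front
user=("${name[@]}")                   # copy; the quotes keep 'last name' in one piece
split=(${name[@]})                    # unquoted copy: 'last name' is split in two
echo "${#user[@]} ${#split[@]}"       # 3 4
nameuser=("${name[@]}" "${user[@]}")  # concatenate two arrays
echo "${#nameuser[@]}"                # 6
unset 'name[0]'                       # remove element 0 ("title")
echo "${name[@]}"                     # firstname last name
```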

Command Line Arguments

- Similar to programming languages, bash (and other shell scripting languages) can also take command line arguments

- ./scriptname arg1 arg2 arg3 arg4 ...

- $0, $1, $2, $3, etc.: positional parameters corresponding to ./scriptname, arg1, arg2, arg3, arg4, ... respectively

- For more than 9 arguments, positional parameters need to be protected, e.g. ${10}, OR

- use shift N: positional parameters N+1 through $# are renamed $1 through $#-N, and $# decreases by N

- $#: number of command line arguments

- $*: all of the positional parameters; quoted as "$*", they are seen as a single word

- $@: same as $*, but quoted as "$@", each parameter stays a separate quoted string
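A sketch of "$*", "$@" and shift. Instead of running a script with real arguments, it uses set -- to stand in for ./scriptname one 'two three', so the argument values are assumptions for illustration.

```shell
#!/bin/bash
# Simulate "./scriptname one 'two three'" by setting the positional parameters directly
set -- one "two three"
echo "$#"                    # 2
printf '[%s]\n' "$*"         # [one two three]          (one word)
printf '[%s]\n' "$@"         # [one] then [two three]   (two words)
printf '[%s]\n' $@           # [one] [two] [three]      (unquoted: split again)
shift 1                      # drop $1; the old $2 becomes $1
echo "$# $1"                 # 1 two three
```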

Example - Command Line Arguments

#!/bin/bash

USAGE="USAGE: $0 <at least 1 argument>"

if [[ "$#" -lt 1 ]]; then

echo $USAGE

exit 1

fi

echo "Number of Arguments: " $#

echo "List of Arguments: " $@

echo "Name of script that you are running: " $0

echo "Command You Entered:" $0 $*

while [ "$#" -gt 0 ]; do

echo "Argument List is: " $@

echo "Number of Arguments: " $#

shift

done

bash ./shift.sh $(seq 1 3)

## Number of Arguments: 3

## List of Arguments: 1 2 3

## Name of script that you are running: ./shift.sh

## Command You Entered: ./shift.sh 1 2 3

## Argument List is: 1 2 3

## Number of Arguments: 3

## Argument List is: 2 3

## Number of Arguments: 2

## Argument List is: 3

## Number of Arguments: 1

Functions

- Like "real" programming languages, bash has functions.

- A function is a subroutine, a code block that implements a set of operations, a "black box" that performs a specified task.

- Wherever there is repetitive code, when a task repeats with only slight variations in procedure, then consider using a function.

function function_name {

some commands

}

OR

function_name () {

some commands

}

Example Function

#!/bin/bash

usage () {

echo "USAGE: $0 [at least 11 arguments]"

exit 1

}

[[ "$#" -lt 11 ]] && usage

echo "Number of Arguments: " $#

echo "List of Arguments: " $@

echo "Name of script that you are running: " $0

echo "Command You Entered:" $0 $*

echo "First Argument" $1

echo "Tenth and Eleventh argument" $10 $11 ${10} ${11}

echo "Argument List is: " $@

echo "Number of Arguments: " $#

shift 9

echo "Argument List is: " $@

echo "Number of Arguments: " $#

bash ./shift10.sh $(seq 1 10)

## USAGE: ./shift10.sh [at least 11 arguments]

bash ./shift10.sh $(seq 0 2 30)

## Number of Arguments: 16

## List of Arguments: 0 2 4 6 8 10 12 14 16 18 20 22 24 26 28 30

## Name of script that you are running: ./shift10.sh

## Command You Entered: ./shift10.sh 0 2 4 6 8 10 12 14 16 18 20 22 24 26 28 30

## First Argument 0

## Tenth and Eleventh argument 00 01 18 20

## Argument List is: 0 2 4 6 8 10 12 14 16 18 20 22 24 26 28 30

## Number of Arguments: 16

## Argument List is: 18 20 22 24 26 28 30

## Number of Arguments: 7

Function Arguments

- You can also pass arguments to a function.

- All function parameters or arguments can be accessed via $1, $2, $3, ..., $N.

- $0 always points to the shell script name.

- $* or $@ holds all parameters or arguments passed to the function.

- $# holds the number of positional parameters passed to the function.

- The array variable FUNCNAME contains the names of all shell functions currently in the execution call stack.

- By default all variables are global.

- Modifying a variable in a function changes it in the whole script.

- You can create local variables using the local command

local var=value

local varName
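A sketch of the global-versus-local rule and of FUNCNAME; counter, bump_global, bump_local and show_args are hypothetical names chosen for this example.

```shell
#!/bin/bash
counter=0                                      # global variable
bump_global() { counter=$(( counter + 1 )); }  # changes the global
bump_local() {
  local counter=100                            # shadows the global inside this function only
  counter=$(( counter + 1 ))
  echo "inside: $counter"
}
show_args() { echo "$# args, first=$1, running in ${FUNCNAME[0]}"; }

bump_global; bump_global
echo "after two bump_global calls: $counter"   # 2
bump_local                                     # inside: 101
echo "after bump_local: $counter"              # still 2
show_args a b c                                # 3 args, first=a, running in show_args
```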

Recursive Function

- A function may recursively call itself.

#!/bin/bash

usage () { echo "USAGE: $0 <integer>" ; exit 1; }

factorial() {

local i=$1 ; local f

declare -i i ; declare -i f

if [[ "$i" -le 2 && "$i" -ne 0 ]]; then

echo $i

elif [[ "$i" -eq 0 ]]; then

echo 1

else

f=$(( $i - 1 ))

f=$( factorial $f )

f=$(( $f * $i ))

echo $f

fi

}

if [[ "$#" -eq 0 ]]; then

usage

else

for i in "$@" ; do

x=$( factorial $i )

echo "Factorial of $i is $x"

done

fi

bash ./factorial3.sh $(seq 1 2 18)

## Factorial of 1 is 1

## Factorial of 3 is 6

## Factorial of 5 is 120

## Factorial of 7 is 5040

## Factorial of 9 is 362880

## Factorial of 11 is 39916800

## Factorial of 13 is 6227020800

## Factorial of 15 is 1307674368000

## Factorial of 17 is 355687428096000

Research Computing Resources

Maia

- 32-core Symmetric Multiprocessor (SMP) system available to all Lehigh Faculty, Staff and Students

- dual 16-core AMD Opteron 6380 2.5GHz CPU

- 128GB RAM and 4TB HDD

- Theoretical Performance: 640 GFLOPs (640 billion floating point operations per second)

- Access: Batch Scheduled, no interactive access to Maia

\[ GFLOPs = cores \times clock \times \frac{FLOPs}{cycle} \]

FLOPs for various AMD & Intel CPU generations
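A worked check of the formula with Maia's numbers. The FLOPs/cycle value is back-solved from the quoted 640 GFLOPs, 32 cores and 2.5GHz clock, rather than taken from AMD's documentation:

\[ \frac{FLOPs}{cycle} = \frac{GFLOPs}{cores \times clock} = \frac{640}{32 \times 2.5} = 8 \]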

- Currently out of warranty.

- Will be permanently shut down in case of hardware failure.

- No plans to replace Maia.

Research Computing Resources

- Sol: 80 node Shared Condominium Cluster

- 9 nodes, dual 10-core Intel Xeon E5-2650 v3 2.3GHz CPU, 25MB Cache, 128GB RAM

- 33 nodes, dual 12-core Intel Xeon E5-2670 v3 2.3GHz CPU, 30 MB Cache, 128GB RAM

- 14 nodes, dual 12-core Intel Xeon E5-2650 v4 2.3GHz CPU, 30 MB Cache, 64GB RAM

- 1 node, dual 8-core Intel Xeon 2630 v3 2.4GHz CPU, 20 MB Cache, 512GB RAM

- 23 nodes, dual 18-core Intel Xeon Gold 6140 2.3GHz CPU, 24.7 MB Cache, 192GB RAM

- 66 nVIDIA GTX 1080 GPU cards

- 48 nVIDIA RTX 2080 GPU cards

- 1TB HDD per node

- 2:1 oversubscribed Infiniband EDR (100Gb/s) interconnect fabric

- Theoretical Performance: 80.3968 TFLOPs (CPU) + 34.5876 TFLOPs (GPU)

- Access: Batch Scheduled, interactive on login node for compiling, editing only

Sol

| Processor | Partition | Nodes | CPUs | GPUs | CPU Memory (GB) | GPU Memory (GB) | CPU TFLOPs | GPU TFLOPs | Annual SUs |
|---|---|---|---|---|---|---|---|---|---|
| E5-2650v3 | lts | 9 | 180 | 4 | 1152 | 32 | 5.76 | 1.028 | 1,576,800 |
| E5-2670v3 | imlab, eng | 33 | 792 | 62 | 4224 | 496 | 25.344 | 15.934 | 6,937,920 |
| E5-2650v4 | engc | 14 | 336 | | 896 | | 9.6768 | | 2,943,360 |
| E5-2640v3 | himem | 1 | 16 | | 512 | | 0.5632 | | 140,160 |
| Gold 6140 | enge, engi, unnamed | 23 | 828 | 48 | 4416 | 528 | 39.744 | 17.626 | 7,253,280 |
| Total | | 80 | 2152 | 114 | 11200 | 1056 | 81.088 | 34.588 | 18,851,520 |

- Haswell (v3) and Broadwell (v4) provide 256-bit Advanced Vector Extensions SIMD instructions

- double the base FLOPs at expense of CPU Speed

- Skylake (Gold) provides 512-bit Advanced Vector Extensions SIMD instructions

- quadruple the base FLOPs at expense of CPU Speed

What about storage resources?

- LTS provides various storage options for research and teaching

- Some are cloud based and subject to Lehigh's Cloud Policy

- For research, LTS is deploying a 600TB refreshed Ceph storage system

- It is built on the open-source Ceph storage software

- Research groups can purchase a sharable project space on Ceph @ $375/TB for 5 year duration

- Ceph is built, operated and administered in-house by LTS Research Computing Staff.

- located in Data Center in EWFM with a backup cluster in Packard Lab

- HPC users can write job output directly to their Ceph volume

- Ceph volume can be mounted as a network drive on Windows or CIFS on Mac and Linux

- See Ceph FAQ for more details

- Storage quota on

- Maia: 5GB

- Sol: 150GB

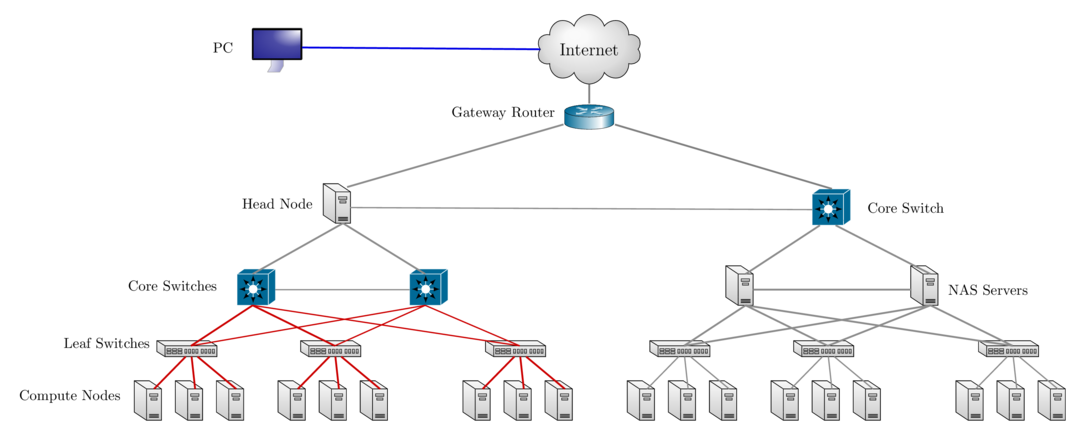

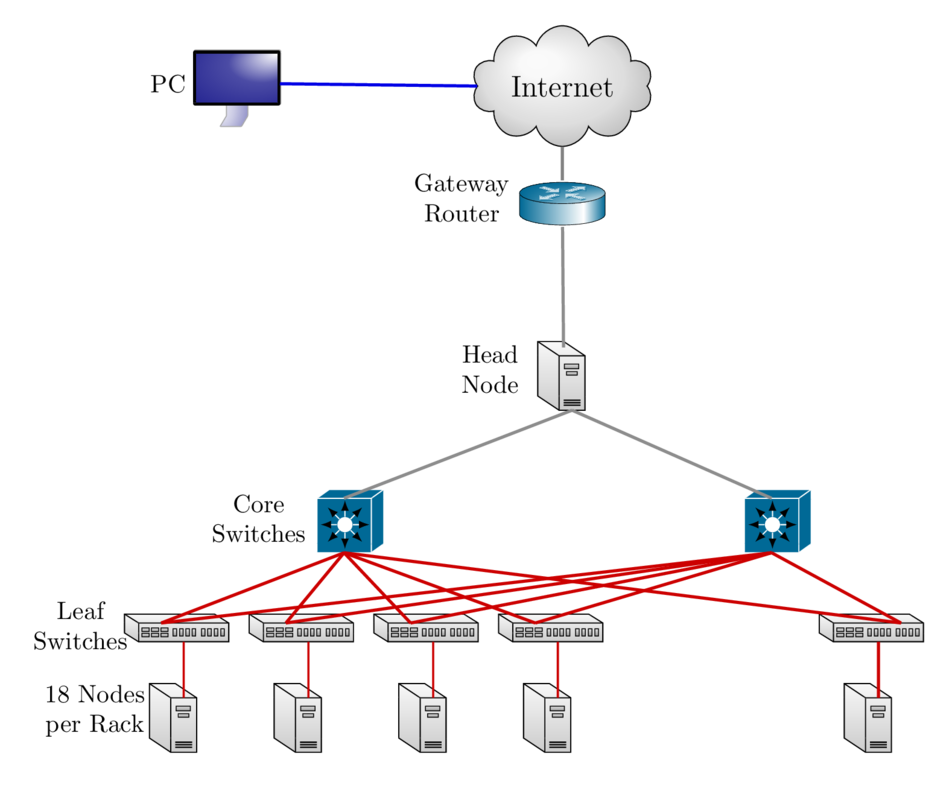

Network Layout Sol & Ceph Storage Cluster

How do I get started using HPC resources?

- Login to sol:

ssh -X username@sol.cc.lehigh.edu

- You should see something like [alp514@sol ~]$ if you are logged into sol

- This is known as the command prompt

- sol is the head/login and storage node for the Sol cluster.

- Do not run computation on this node.

- Editing files and compiling code are acceptable on this node.

- Running intense computation on this node causes a high load on the storage, which slows down other users' jobs.

- All compute nodes are labelled as sol-[a-e][1-6][01-18]

- [a-e] for each distinct type of node

- [1-6] is the rack number in the HPC row

- [01-18] is the location in the rack with 01 at the bottom and 18 at the top
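A sketch that decodes a node name according to the sol-[a-e][1-6][01-18] scheme above, using bash's =~ regex test. decode_node is a hypothetical helper, not a command installed on Sol; sol-d118 is the node name that appears in the prompt of the compile example later in these slides.

```shell
#!/bin/bash
# Decode a compute-node name of the form sol-[a-e][1-6][01-18]
# (decode_node is a hypothetical helper, not a tool installed on Sol)
decode_node() {
  local re='^sol-([a-e])([1-6])([0-9]{2})$'
  # 10# forces base 10 so that slots such as 08 are not read as octal
  if [[ $1 =~ $re ]] && (( 10#${BASH_REMATCH[3]} >= 1 && 10#${BASH_REMATCH[3]} <= 18 )); then
    echo "node type ${BASH_REMATCH[1]}, rack ${BASH_REMATCH[2]}, slot ${BASH_REMATCH[3]}"
  else
    echo "$1 does not follow the sol-[a-e][1-6][01-18] pattern" >&2
    return 1
  fi
}
decode_node sol-d118   # node type d, rack 1, slot 18
```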

Available Software

- Commercial, Free and Open source software is installed on

- Software is managed using the module environment

- Why? We may have different versions of the same software, or software built with different compilers

- The module environment allows you to dynamically change your *nix environment based on the software being used

- Standard on many university and national High Performance Computing resources since circa 2011

- How to use Sol/Maia Software on your linux workstation

- LTS provides licensed and open source software for Windows, Mac and Linux and Gogs, a self hosted Git Service or Github clone

Module Command

| Command | Description |
|---|---|
| module avail | show list of software available on resource |
| module load abc | add software abc to your environment (modify your PATH, LD_LIBRARY_PATH etc as needed) |
| module unload abc | remove abc from your environment |
| module swap abc1 abc2 | swap abc1 with abc2 in your environment |
| module purge | remove all modules from your environment |
| module show abc | display what variables are added or modified in your environment |
| module help abc | display help message for the module abc |

- Users who prefer not to use the module environment will need to modify their .bashrc or .tcshrc files. Run module show for a list of variables that need to be modified, appended or prepended
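For those who skip modules, a sketch of the kind of lines that would go in .bashrc. The prefix /share/Apps/abc/1.0 is a hypothetical install location; the real paths for a package are whatever module show reports.

```shell
#!/bin/bash
# Hypothetical install prefix for a package "abc"; use the real paths from: module show abc
ABC_HOME=/share/Apps/abc/1.0
# Prepend, so this version is found before any system copy
export PATH=${ABC_HOME}/bin:${PATH}
# ${VAR:+:$VAR} appends ":old value" only when LD_LIBRARY_PATH is already set,
# avoiding a trailing empty entry (which the loader treats as the current directory)
export LD_LIBRARY_PATH=${ABC_HOME}/lib${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
echo "$PATH"
```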

Installed Software

- Chemistry/Materials Science

- CPMD

- GAMESS

- Gaussian

- NWCHEM

- Quantum Espresso

- VASP (Restricted Access)

- Molecular Dynamics

- Desmond

- GROMACS

- LAMMPS

- NAMD

MPI enabled

- Computational Fluid Dynamics

- Abaqus

- Ansys

- Comsol

- OpenFOAM

- OpenSees

- Math

- GNU Octave

- Magma

- Maple

- Mathematica

- Matlab

More Software

Machine & Deep Learning

- TensorFlow

- Caffe

- SciKit-Learn

- SciKit-Image

- Theano

- Keras

Natural Language Processing (NLP)

- Natural Language Toolkit (NLTK)

- Stanford NLP

- Bioinformatics

- BamTools

- BayeScan

- bgc

- BWA

- FreeBayes

- SAMTools

- tabix

- trimmomatic

- barcode_splitter

- phyluce

- VelvetOptimiser

More Software

- Scripting Languages

- R

- Perl

- Python

- Compilers

- GNU

- Intel

- JAVA

- PGI

- CUDA

- Parallel Programming

- MVAPICH2

- OpenMPI

- Libraries

- BLAS/LAPACK/GSL/SCALAPACK

- Boost

- FFTW

- Intel MKL

- HDF5

- NetCDF

- METIS/PARMETIS

- PetSc

- QHull/QRupdate

- SuiteSparse

- SuperLU

More Software

- Visualization Tools

- Atomic Simulation Environment

- Avogadro

- GaussView

- GNUPlot

- PWGui

- PyMol

- RDKit

- VESTA

- VMD

- XCrySDen

- Other Tools

- Artelys Knitro

- CMake

- GNU Parallel

- Lmod

- Numba

- Scons

- SPACK

- MD Tools

- BioPython

- CCLib

- MDAnalysis

Using your own Software?

- You can always install software in your home directory

- SPACK is an excellent package manager that can even create module files

- Stay compliant with software licensing

- Modify your .bashrc/.tcshrc to add software to your path, OR

- create a module and dynamically load it so that it doesn't interfere with other software installed on the system

- e.g. You might want to use openmpi instead of mvapich2

- the system admin may not want to install it system-wide for just one user

- Add the directory where you will install the module files to the variable MODULEPATH in .bashrc/.tcshrc

# My .bashrc file

export MODULEPATH=${MODULEPATH}:/home/alp514/modulefiles

Compilers

- Various versions of compilers installed on Sol

- Open Source: GNU Compiler Collection (commonly called gcc, although gcc is strictly the C compiler)

- 4.8.5 (system default), 5.3.0, 6.1.0 and 7.1.0

- Commercial: Only two seats of each

- Intel Compiler: 16.0.3, 17.0.0, 17.0.3 and 18.0.1

- Portland Group or PGI: 16.5, 16.10, 17.4, 17.7 and 18.3

- We are licensed to install any available version

- On Sol, all except gcc 4.8.5 are available via the module environment

| Language | GNU | Intel | PGI |
|---|---|---|---|
| Fortran | gfortran | ifort | pgfortran |
| C | gcc | icc | pgcc |
| C++ | g++ | icpc | pgc++ |

Compiling Code

- Usage:

<compiler> <options> <source code>

- Example:

ifort -o saxpyf saxpy.f90

gcc -o saxpyc saxpy.c

- Common compiler options or flags

-o myexec: compile code and create an executable myexec; the default executable is a.out.

-l{libname}: link compiled code to the library lib{libname} (e.g. -lgsl links libgsl).

-L{directory path}: directory to search for libraries.

-I{directory path}: directory to search for include files and Fortran modules.

Example: -L/share/Apps/gsl/2.1/intel-16.0.3/lib -lgsl -I/share/Apps/gsl/2.1/intel-16.0.3/include

- Target Sandy Bridge and later processors for optimization using a unified binary:

- Intel: -axCORE-AVX512,CORE-AVX2,CORE-AVX-I,AVX

- PGI: -tp sandybridge,haswell,skylake

- GNU: cannot create a unified binary targeting multiple architectures
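The -I, -L and -l flags usually come as a set pointing into one installation prefix, as in the GSL example above. A minimal sketch, assuming the common PREFIX/include and PREFIX/lib layout; lib_flags is a hypothetical helper and myprog.c a placeholder source file:

```shell
# Hypothetical helper: print include, library-path and link flags for a
# library installed under PREFIX (assumes PREFIX/include and PREFIX/lib).
# Usage: lib_flags PREFIX LIBNAME   (LIBNAME without the "lib" prefix)
lib_flags() {
  local prefix=$1 name=$2
  echo "-I${prefix}/include -L${prefix}/lib -l${name}"
}

# Example on Sol (not run here):
#   icc -o myprog myprog.c $(lib_flags /share/Apps/gsl/2.1/intel-16.0.3 gsl)
```

Depending on how GSL was built, the link line may also need a CBLAS library such as -lgslcblas.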

Compiling and Running Serial Codes

[2018-02-22 08:47.27] ~/Workshop/2017XSEDEBootCamp/OpenMP

[alp514.sol-d118](842): icc -o laplacec laplace_serial.c

[2018-02-22 08:47.46] ~/Workshop/2017XSEDEBootCamp/OpenMP

[alp514.sol-d118](843): ./laplacec

Maximum iterations [100-4000]?

1000

---------- Iteration number: 100 ------------

[995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67

---------- Iteration number: 200 ------------

[995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28

---------- Iteration number: 300 ------------

[995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49

---------- Iteration number: 400 ------------

[995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59

---------- Iteration number: 500 ------------

[995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66

---------- Iteration number: 600 ------------

[995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70

---------- Iteration number: 700 ------------

[995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73

---------- Iteration number: 800 ------------

[995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75

---------- Iteration number: 900 ------------

[995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77

---------- Iteration number: 1000 ------------

[995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78

Max error at iteration 1000 was 0.034767

Total time was 4.099030 seconds.

Compilers for Parallel Programming: OpenMP & TBB

- OpenMP support is built into the compilers; enable it with the flag below

| Compiler | OpenMP Flag | TBB Flag |
|---|---|---|
| GNU | -fopenmp | -L$TBBROOT/lib/intel64_lin/gcc4.4 -ltbb |
| Intel | -qopenmp | -L$TBBROOT/lib/intel64_lin/gcc4.4 -ltbb |
| PGI | -mp | |

- TBB is available as part of the Intel Compiler Suite

- $TBBROOT depends on the Intel Compiler Suite version you want to use

- It is not known whether TBB works with the PGI Compilers

[alp514.sol](1083): module show intel

-------------------------------------------------------------------

/share/Apps/share/Modules/modulefiles/toolchain/intel/16.0.3:

module-whatis Set up Intel 16.0.3 compilers.

conflict pgi

conflict gcc

setenv INTEL_LICENSE_FILE /share/Apps/intel/licenses/server.lic

setenv IPPROOT /share/Apps/intel/compilers_and_libraries_2016.3.210/linux/ipp

setenv MKLROOT /share/Apps/intel/compilers_and_libraries_2016.3.210/linux/mkl

setenv TBBROOT /share/Apps/intel/compilers_and_libraries_2016.3.210/linux/tbb

... (snip) ...
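Build scripts that switch compilers need the matching OpenMP flag from the table above. A minimal sketch; omp_flag is a hypothetical helper, and the TBB flags are left out:

```shell
# Hypothetical helper: print the OpenMP flag for a compiler driver,
# following the OpenMP & TBB table above.
omp_flag() {
  case "$1" in
    gcc|g++|gfortran)     echo "-fopenmp" ;;
    icc|icpc|ifort)       echo "-qopenmp" ;;
    pgcc|pgc++|pgfortran) echo "-mp" ;;
    *) echo "omp_flag: unknown compiler '$1'" >&2; return 1 ;;
  esac
}

# Example (not run here):
#   CC=icc; $CC $(omp_flag $CC) -o laplacec laplace_omp.c
```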

Compiling and Running OpenMP Codes

[2018-02-22 08:47.56] ~/Workshop/2017XSEDEBootCamp/OpenMP/Solutions

[alp514.sol-d118](845): icc -qopenmp -o laplacec laplace_omp.c

[2018-02-22 08:48.09] ~/Workshop/2017XSEDEBootCamp/OpenMP/Solutions

[alp514.sol-d118](846): OMP_NUM_THREADS=4 ./laplacec

Maximum iterations [100-4000]?

1000

---------- Iteration number: 100 ------------

[995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67

---------- Iteration number: 200 ------------

[995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28

---------- Iteration number: 300 ------------

[995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49

---------- Iteration number: 400 ------------

[995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59

---------- Iteration number: 500 ------------

[995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66

---------- Iteration number: 600 ------------

[995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70

---------- Iteration number: 700 ------------

[995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73

---------- Iteration number: 800 ------------

[995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75

---------- Iteration number: 900 ------------

[995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77

---------- Iteration number: 1000 ------------

[995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78

Max error at iteration 1000 was 0.034767

Total time was 2.459961 seconds.

Compilers for Parallel Programming: MPI

- MPI is a library, not a compiler; it is built separately for each compiler.

| Language | Compile Command |
|---|---|
| Fortran | mpif90 |
| C | mpicc |
| C++ | mpicxx |

- Usage:

<compiler> <options> <source code>

[2017-10-30 08:40.30] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions

[alp514.sol](1096): mpif90 -o laplace_f90 laplace_mpi.f90

[2017-10-30 08:40.45] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions

[alp514.sol](1097): mpicc -o laplace_c laplace_mpi.c

[2017-10-30 08:40.57] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions

- The MPI compiler command is just a wrapper around the underlying compiler

[alp514.sol](1080): mpif90 -show

ifort -fPIC -I/share/Apps/mvapich2/2.1/intel-16.0.3/include -I/share/Apps/mvapich2/2.1/intel-16.0.3/include -L/share/Apps/mvapich2/2.1/intel-16.0.3/lib -lmpifort -Wl,-rpath -Wl,/share/Apps/mvapich2/2.1/intel-16.0.3/lib -Wl,--enable-new-dtags -lmpi

MPI Libraries

- There are two widely used MPI implementations, each the basis for others

MPICH: Developed by Argonne National Laboratory

- used as a starting point for various commercial and open source MPI libraries

- MVAPICH2: Developed by D. K. Panda with support for InfiniBand, iWARP, RoCE, and Intel Omni-Path (default MPI on Sol)

- Intel MPI: Intel's version of MPI. You need this for Xeon Phi MICs.

- available in the cluster edition of the Intel Compiler Suite; not available at Lehigh

- IBM MPI for IBM BlueGene and Cray MPI for Cray systems

OpenMPI: A free, open source implementation formed by merging three well-known MPI implementations. It can be used on commodity networks as well as high-speed networks.

- FT-MPI from the University of Tennessee

- LA-MPI from Los Alamos National Laboratory

- LAM/MPI from Indiana University

Running MPI Programs

- Every MPI implementation comes with its own job launcher: mpiexec (MPICH, OpenMPI & MVAPICH2), mpirun (OpenMPI) or mpirun_rsh (MVAPICH2)

- Example:

mpiexec [options] <program name> [program options]

- Required options: number of processes and list of hosts on which to run the program

| Option Description | mpiexec | mpirun | mpirun_rsh |
|---|---|---|---|
| run on x cores | -n x | -np x | -n x |
| location of the hostfile | -f filename | -machinefile filename | -hostfile filename |

- To run an MPI code, you need to use the launcher from the same implementation that was used to compile the code.

- For example, you cannot compile code with OpenMPI and run it using the MPICH or MVAPICH2 launcher

- Since MVAPICH2 is based on MPICH, you can launch MVAPICH2 compiled code using MPICH's launcher.

- The SLURM scheduler provides srun, which can launch MPI programs in place of the implementation-specific launchers

Compiling and Running MPI Codes

[2018-02-22 08:48.27] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions

[alp514.sol-d118](848): mpicc -o laplacec laplace_mpi.c

[2018-02-22 08:48.41] ~/Workshop/2017XSEDEBootCamp/MPI/Solutions

[alp514.sol-d118](849): mpiexec -n 4 ./laplacec

Maximum iterations [100-4000]?

1000

---------- Iteration number: 100 ------------

[995,995]: 63.33 [996,996]: 72.67 [997,997]: 81.40 [998,998]: 88.97 [999,999]: 94.86 [1000,1000]: 98.67

---------- Iteration number: 200 ------------

[995,995]: 79.11 [996,996]: 84.86 [997,997]: 89.91 [998,998]: 94.10 [999,999]: 97.26 [1000,1000]: 99.28

---------- Iteration number: 300 ------------

[995,995]: 85.25 [996,996]: 89.39 [997,997]: 92.96 [998,998]: 95.88 [999,999]: 98.07 [1000,1000]: 99.49

---------- Iteration number: 400 ------------

[995,995]: 88.50 [996,996]: 91.75 [997,997]: 94.52 [998,998]: 96.78 [999,999]: 98.48 [1000,1000]: 99.59

---------- Iteration number: 500 ------------

[995,995]: 90.52 [996,996]: 93.19 [997,997]: 95.47 [998,998]: 97.33 [999,999]: 98.73 [1000,1000]: 99.66

---------- Iteration number: 600 ------------

[995,995]: 91.88 [996,996]: 94.17 [997,997]: 96.11 [998,998]: 97.69 [999,999]: 98.89 [1000,1000]: 99.70

---------- Iteration number: 700 ------------

[995,995]: 92.87 [996,996]: 94.87 [997,997]: 96.57 [998,998]: 97.95 [999,999]: 99.01 [1000,1000]: 99.73

---------- Iteration number: 800 ------------

[995,995]: 93.62 [996,996]: 95.40 [997,997]: 96.91 [998,998]: 98.15 [999,999]: 99.10 [1000,1000]: 99.75

---------- Iteration number: 900 ------------

[995,995]: 94.21 [996,996]: 95.81 [997,997]: 97.18 [998,998]: 98.30 [999,999]: 99.17 [1000,1000]: 99.77

---------- Iteration number: 1000 ------------

[995,995]: 94.68 [996,996]: 96.15 [997,997]: 97.40 [998,998]: 98.42 [999,999]: 99.22 [1000,1000]: 99.78

Max error at iteration 1000 was 0.034767

Total time was 1.030180 seconds.
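The three runs of the same Laplace problem give a rough feel for parallel speedup. Taking the serial time as T_1 and the 4-thread OpenMP and 4-process MPI times as T_4, the speedup S_p = T_1/T_p and efficiency E_p = T_1/(p T_p) work out as follows (single timings, so treat them as indicative only):

```latex
\begin{aligned}
S_p &= \frac{T_1}{T_p}, & E_p &= \frac{S_p}{p} = \frac{T_1}{p\,T_p} \\
S_4^{\mathrm{OpenMP}} &= \frac{4.099030\,\mathrm{s}}{2.459961\,\mathrm{s}} \approx 1.67, & E_4^{\mathrm{OpenMP}} &= \frac{4.099030}{4 \times 2.459961} \approx 0.42 \\
S_4^{\mathrm{MPI}} &= \frac{4.099030\,\mathrm{s}}{1.030180\,\mathrm{s}} \approx 3.98, & E_4^{\mathrm{MPI}} &= \frac{4.099030}{4 \times 1.030180} \approx 0.99
\end{aligned}
```

The OpenMP and MPI versions are different source files (laplace_omp.c and laplace_mpi.c), so the gap reflects the two codes as well as the programming models.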

Cluster Environment

A cluster is a group of computers (nodes) that work closely together

Two types of nodes

- Head/Login Node

- Compute Node

Multi-user environment

Each user may have multiple jobs running simultaneously

How to run jobs

- All compute intensive jobs are scheduled

- Write a script to submit jobs to a scheduler

- need to have some background in shell scripting (bash/tcsh)

- Need to specify

- Resources required (which depends on configuration)

- number of nodes

- number of processes per node

- memory per node

- How long do you want the resources

- have an estimate for how long your job will run

- Which queue to submit jobs

- SLURM uses the term partition instead of queue


Scheduler & Resource Management

Software that manages resources (CPU time, memory, etc.) and schedules job execution

- Sol: Simple Linux Utility for Resource Management (SLURM)

- Others: Portable Batch System (PBS)

- Scheduler: Maui

- Resource Manager: Torque

- Allocation Manager: Gold

A job can be considered as a user’s request to use a certain amount of resources for a certain amount of time

The Scheduler or queuing system determines

- The order jobs are executed

- On which node(s) jobs are executed

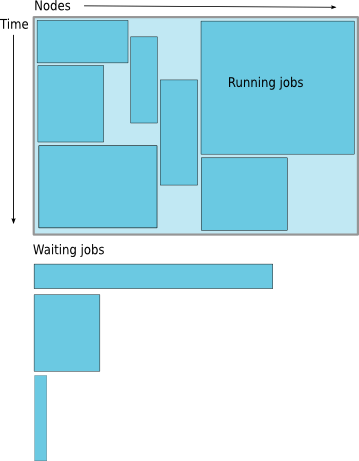

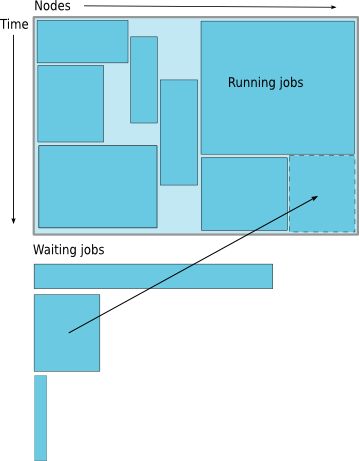

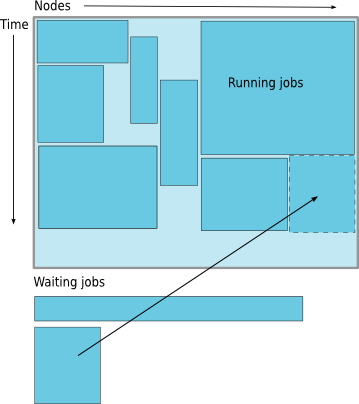

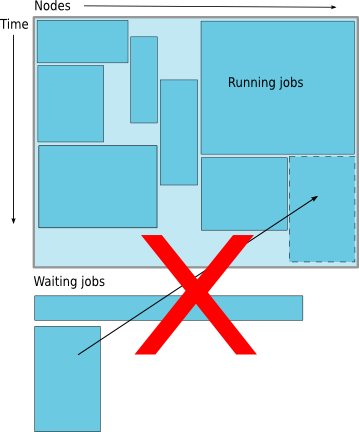

Job Scheduling

Map jobs onto the node-time space

- Assuming CPU time is the only resource

Need to find a balance between

- Honoring the order in which jobs are received

- Maximizing resource utilization

Backfilling

- A strategy to improve utilization

- Allow a job to jump ahead of others when there are enough idle nodes

- Must not affect the estimated start time of the job with the highest priority

How much time must I request

- Ask for an amount of time that is

- Long enough for your job to complete

- As short as possible to increase the chance of backfilling
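The backfilling rule above can be sketched as a simple check. This is a simplified, conservative version under stated assumptions: node count is the only resource, and a waiting job may start early only if it fits in the currently idle nodes and finishes before the reserved start of the highest-priority job. Real schedulers weigh more factors; can_backfill is a hypothetical helper:

```shell
# Hypothetical, simplified backfill test (node count is the only resource).
# Usage: can_backfill IDLE_NODES JOB_NODES JOB_MINUTES MINUTES_UNTIL_RESERVATION
# Succeeds if the job fits in the idle nodes AND would finish before the
# highest-priority job is due to start, so that job is not delayed.
can_backfill() {
  local idle=$1 need=$2 runtime=$3 window=$4
  [ "$need" -le "$idle" ] && [ "$runtime" -le "$window" ]
}

# 6 idle nodes, top-priority job reserved to start in 120 minutes
if can_backfill 6 2 90 120; then echo "2 nodes x 90 min: backfilled"; fi
if ! can_backfill 6 2 180 120; then echo "2 nodes x 180 min: must wait"; fi
```

This is why a tight time request helps: the same 2-node job fits the window when it asks for 90 minutes but not when it asks for 180.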

Available Queues

- Sol

| Partition Name | Max Runtime in hours | Max SU consumed per node per hour |
|---|---|---|
| lts | 72 | 20 |
| imlab | 48 | 22 |
| imlab-gpu | 48 | 24 |
| eng | 72 | 22 |
| eng-gpu | 72 | 24 |
| engc | 72 | 24 |
| himem | 72 | 48 |
| enge | 72 | 36 |
| engi | 72 | 36 |

- Maia

| Queue Name | Max Runtime in hours | Max Simultaneous Core-hours |
|---|---|---|
| smp-test | 1 | 4 |
| smp | 96 | 384 |
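The Sol table lets you bound a job's cost and check its time request before submitting: the cost is at most nodes × hours × (SU per node per hour), and the requested time must not exceed the partition's maximum runtime. A minimal sketch with values transcribed from that table (they may change); sol_limits, max_su and within_limit are hypothetical helpers:

```shell
# Limits transcribed from the Sol partition table (may be out of date).
# Prints "MAX_HOURS SU_PER_NODE_HOUR" for a partition.
sol_limits() {
  case "$1" in
    lts)          echo "72 20" ;;
    imlab)        echo "48 22" ;;
    imlab-gpu)    echo "48 24" ;;
    eng)          echo "72 22" ;;
    eng-gpu|engc) echo "72 24" ;;
    himem)        echo "72 48" ;;
    enge|engi)    echo "72 36" ;;
    *) echo "unknown partition: $1" >&2; return 1 ;;
  esac
}

# Upper bound on SUs: nodes x hours x SU per node per hour
max_su() {   # usage: max_su PARTITION NODES HOURS
  local limits rate
  limits=$(sol_limits "$1") || return 1
  rate=${limits#* }                 # second field: SU per node per hour
  echo $(( $2 * $3 * rate ))
}

# Succeeds if HH:MM:SS is within the partition's maximum runtime
within_limit() {   # usage: within_limit PARTITION HH:MM:SS
  local limits max_hours secs
  limits=$(sol_limits "$1") || return 1
  max_hours=${limits%% *}           # first field: max runtime in hours
  secs=$(echo "$2" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }')
  [ "$secs" -le $(( max_hours * 3600 )) ]
}

max_su lts 2 10   # 2 nodes x 10 hours x 20 SU
```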

How much memory can or should I use per core?

The amount of installed memory less the amount that is used by the operating system and other utilities

A general rule of thumb on most HPC resources: leave 1-2GB for the OS to run.

Sol

| Partition | Max Memory/core (GB) | Recommended Memory/Core (GB) |
|---|---|---|
| lts | 6.4 | 6.2 |
| eng/imlab/imlab-gpu/enge/engi | 5.3 | 5.1 |
| engc | 2.66 | 2.4 |
| himem | 32 | 31.5 |

If you need to run a single-core job that requires 10GB of memory in the imlab partition, you need to request 2 cores (2 × 5.1GB = 10.2GB) even though you are only using 1 core.
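The rule behind this example is: cores to request = ceil(memory needed / recommended memory per core). A minimal sketch using the Recommended Memory/Core column; cores_for_mem is a hypothetical helper:

```shell
# Hypothetical helper: smallest number of cores whose combined memory
# allowance covers the job, i.e. ceil(MEM_GB / MEM_PER_CORE_GB).
# Usage: cores_for_mem MEM_GB MEM_PER_CORE_GB
cores_for_mem() {
  awk -v m="$1" -v c="$2" 'BEGIN {
    n = int(m / c)        # whole cores that fit under the request
    if (n * c < m) n++    # round up when there is a remainder
    if (n < 1) n = 1      # always request at least one core
    print n
  }'
}

cores_for_mem 10 5.1   # imlab example above: prints 2
```

The result is the value to pass as --ntasks-per-node for a single-node job.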

Maia: Users need to specify the memory required in their submit script. The maximum memory that should be requested is 126GB.

Basic Job Manager Commands

- Submission

- Monitoring

- Manipulating

- Reporting

Job Types

- Interactive Jobs

- Set up an interactive environment on compute nodes for users

- Will log you into a compute node and wait for your prompt

- Purpose: testing and debugging code. Do not run jobs on the head node!

- All compute nodes follow the naming convention sol-[a,b,c,d,e]###

- The head node is sol

- Batch Jobs

- Executed using a batch script without user intervention

- Advantage: system takes care of running the job

- Disadvantage: cannot change sequence of commands after submission

- Useful for Production runs

- Workflow: write a script -> submit script -> take mini vacation -> analyze results


Useful SLURM Directives

| SLURM Directive | Description |
|---|---|
| --partition=queuename | Submit job to the queuename partition. |
| --time=hh:mm:ss | Request resources to run job for hh hours, mm minutes and ss seconds. |
| --nodes=m | Request resources to run job on m nodes. |
| --ntasks-per-node=n | Request resources to run job on n processors on each node requested. |
| --ntasks=n | Request resources to run job on a total of n processors. |
| --job-name=jobname | Provide a name, jobname, to your job. |
| --output=filename.out | Write SLURM standard output to file filename.out. |
| --error=filename.err | Write SLURM standard error to file filename.err. |
| --mail-type=events | Send an email when the job reaches one of the status events; events can be NONE, BEGIN, END, FAIL, REQUEUE, ALL, TIME_LIMIT, TIME_LIMIT_90 or TIME_LIMIT_80. |
| --mail-user=address | Address to send email. |
| --account=mypi | Charge job to the mypi account. |

Useful SLURM Directives (contd)

| SLURM Directive | Description |
|---|---|
| --qos=nogpu | Request a quality of service (qos) for the job in the imlab and engc partitions. The job will remain in the queue indefinitely if you do not specify qos. |
| --gres=gpu:# | Specify the number of GPUs requested in the GPU partitions. You can request 1 or 2 GPUs, with a minimum of 1 core (CPU) per GPU. |

- SLURM can also take short hand notation for the directives

| Long Form | Short Form |
|---|---|
| --partition=queuename | -p queuename |
| --time=hh:mm:ss | -t hh:mm:ss |
| --nodes=m | -N m |
| --ntasks=n | -n n |
| --account=mypi | -A mypi |
| --job-name=jobname | -J jobname |
| --output=filename.out | -o filename.out |

SLURM Filename Patterns

- sbatch allows for a filename pattern to contain one or more replacement symbols, which are a percent sign "%" followed by a letter (e.g. %j).

| Pattern | Description |
|---|---|
| %A | Job array's master job allocation number. |
| %a | Job array ID (index) number. |
| %J | jobid.stepid of the running job (e.g. "128.0"). |
| %j | jobid of the running job. |
| %N | short hostname. This will create a separate IO file per node. |
| %n | Node identifier relative to current job (e.g. "0" is the first node of the running job). This will create a separate IO file per node. |
| %s | stepid of the running job. |
| %t | task identifier (rank) relative to current job. This will create a separate IO file per task. |
| %u | User name. |
| %x | Job name. |
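A minimal submit-script sketch that uses these patterns so each run gets its own log files; the job name myjob and the lts partition are placeholders, and the echo stands in for the real work:

```shell
#!/bin/bash
#SBATCH --partition=lts
#SBATCH --time=0:10:00
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=1
#SBATCH --job-name=myjob
# %x is replaced by the job name and %j by the job ID, so job 123456
# writes myjob-123456.out and myjob-123456.err
#SBATCH --output=%x-%j.out
#SBATCH --error=%x-%j.err

cd "${SLURM_SUBMIT_DIR:-.}" || exit 1
echo "Job ${SLURM_JOB_ID:-unknown} running on $(uname -n)"
```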

Useful PBS Directives

| PBS Directive | Description |
|---|---|
| -q queuename | Submit job to the queuename queue. |
| -l walltime=hh:mm:ss | Request resources to run job for hh hours, mm minutes and ss seconds. |
| -l nodes=m:ppn=n | Request resources to run job on n processors each on m nodes. |
| -l mem=xGB | Request xGB per node requested; applicable on Maia only. |
| -N jobname | Provide a name, jobname, to your job. |
| -o filename.out | Write PBS standard output to file filename.out. |
| -e filename.err | Write PBS standard error to file filename.err. |
| -j oe | Combine PBS standard output and error into the same file. |
| -M your email address | Address to send email. |
| -m status | Send an email when the job reaches status; status can be a (abort), b (begin) or e (end). The arguments can be combined, e.g. abe sends email when the job begins and when it either aborts or ends. |

Useful PBS/SLURM environment variables

| SLURM Variable | Description | PBS Variable |
|---|---|---|
| SLURM_SUBMIT_DIR | Directory where the job was submitted (where sbatch or qsub was executed) | PBS_O_WORKDIR |
| SLURM_JOB_NODELIST | Hosts allocated to the job (SLURM: a list of node names; PBS: name of a file containing the host list) | PBS_NODEFILE |
| SLURM_NTASKS | Total number of cores for job | PBS_NP |
| SLURM_JOBID | Job ID number given to this job | PBS_JOBID |
| SLURM_JOB_PARTITION | Queue the job is running in | PBS_QUEUE |
| | Walltime in seconds requested | PBS_WALLTIME |
| | Name of the job; can be set using the -N option in the PBS script | PBS_JOBNAME |
| | Indicates job type, PBS_BATCH or PBS_INTERACTIVE | PBS_ENVIRONMENT |
| | Value of the SHELL variable in the environment in which qsub was executed | PBS_O_SHELL |
| | Home directory of the user running qsub | PBS_O_HOME |
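Because the variable names differ, a script meant to run under both schedulers can fall back from the SLURM name to the PBS one. A minimal sketch using only variables from the table; submit_dir, total_cores and job_id are hypothetical helpers:

```shell
# Hypothetical helpers for scripts shared between Sol (SLURM) and Maia (PBS).
# Each prints the SLURM value if set, else the PBS value, else a fallback.
submit_dir() {
  echo "${SLURM_SUBMIT_DIR:-${PBS_O_WORKDIR:-$PWD}}"
}

total_cores() {
  echo "${SLURM_NTASKS:-${PBS_NP:-1}}"
}

job_id() {
  echo "${SLURM_JOBID:-${PBS_JOBID:-none}}"
}

cd "$(submit_dir)" || exit 1
echo "Job $(job_id) using $(total_cores) core(s) in $(pwd)"
```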

Job Types: Interactive

- PBS: Use the qsub -I command with PBS Directives

qsub -I -V -l walltime=<hh:mm:ss>,nodes=<# of nodes>:ppn=<# of core/node> -q <queue name>

- SLURM: Use the srun command with SLURM Directives followed by --pty /bin/bash --login

srun --time=<hh:mm:ss> --nodes=<# of nodes> --ntasks-per-node=<# of core/node> -p <queue name> --pty /bin/bash --login

- If you have the soltools module loaded, then use interact with at least one SLURM Directive

interact -t 20 [Assumes -p lts -N 1 -n 20]

- Default values are 3 days, 1 node, 20 tasks per node and the lts partition

- To run a job interactively, replace --pty /bin/bash --login with the appropriate command

- For example:

srun -t 20 -n 1 -p imlab --qos=nogpu $(which lammps) -in in.lj -var x 1 -var n 1

Job Types: Batch

- Workflow: write a script -> submit script -> take mini vacation -> analyze results

- Batch scripts are written in bash, tcsh, csh or sh

- ksh scripts will work if ksh is installed

- Add PBS or SLURM directives after the shebang line but before any shell commands

- SLURM: #SBATCH DIRECTIVES

- PBS: #PBS DIRECTIVES

Submitting Batch Jobs

- PBS: qsub filename

- SLURM: sbatch filename

- qsub and sbatch can take the options for #PBS and #SBATCH as command line arguments

qsub -l walltime=1:00:00,nodes=1:ppn=16 -q normal filename

sbatch --time=1:00:00 --nodes=1 --ntasks-per-node=20 -p lts filename

Minimal submit scripts for Serial Jobs (PBS on Maia, SLURM on Sol)

#!/bin/bash

#PBS -q smp

#PBS -l walltime=1:00:00

#PBS -l nodes=1:ppn=1

#PBS -l mem=4GB

#PBS -N myjob

cd ${PBS_O_WORKDIR}

./myjob < filename.in > filename.out

#!/bin/bash

#SBATCH --partition=lts

#SBATCH --time=1:00:00

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=1

#SBATCH --job-name myjob

cd ${SLURM_SUBMIT_DIR}

./myjob < filename.in > filename.out

Minimal submit script for MPI Job

#!/bin/bash

#SBATCH --partition=lts

#SBATCH --time=1:00:00

#SBATCH --nodes=2

#SBATCH --ntasks-per-node=20

## For --partition=imlab,

### use --ntasks-per-node=22

### and --qos=nogpu

#SBATCH --job-name myjob

module load mvapich2

cd ${SLURM_SUBMIT_DIR}

srun ./myjob < filename.in > filename.out

exit

Minimal submit script for OpenMP Job

#!/bin/tcsh

#SBATCH --partition=imlab

# Directives can be combined on one line

#SBATCH --time=1:00:00 --nodes=1 --ntasks-per-node=22

#SBATCH --qos=nogpu

#SBATCH --job-name myjob

cd ${SLURM_SUBMIT_DIR}

# Use either

setenv OMP_NUM_THREADS 22

./myjob < filename.in > filename.out

# OR

env OMP_NUM_THREADS=22 ./myjob < filename.in > filename.out

exit

Minimal submit script for LAMMPS GPU job

#!/bin/bash

#SBATCH --partition=imlab

# Directives can also be given one per line

#SBATCH --time=1:00:00

#SBATCH --nodes=1

# 1 CPU can be paired with only 1 GPU

# 1 GPU can be paired with all 24 CPUs

#SBATCH --ntasks-per-node=1

#SBATCH --gres=gpu:1

# Need both GPUs, use --gres=gpu:2

#SBATCH --job-name myjob

cd ${SLURM_SUBMIT_DIR}

# Load LAMMPS Module

module load lammps/17nov16-gpu

# Run LAMMPS for input file in.lj

srun $(which lammps) -in in.lj -sf gpu -pk gpu 1 gpuID ${CUDA_VISIBLE_DEVICES}

exit

Need to run multiple jobs in sequence?

- Option 1: Submit jobs as soon as previous jobs complete

- Option 2: Submit jobs with a dependency
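With SLURM, a dependency is expressed as sbatch --dependency=<type>:<jobid>, for example afterok to start only after a job completes successfully. A minimal sketch that builds this option for one or more job IDs; dep_flag is a hypothetical helper, step1.sh and step2.sh are placeholder scripts, and the sbatch lines are comments because they need a SLURM cluster:

```shell
# Hypothetical helper: build an sbatch dependency option from a type
# (e.g. afterok, afterany) and one or more job IDs joined by colons.
dep_flag() {   # usage: dep_flag TYPE JOBID [JOBID ...]
  local dep_type=$1
  shift
  echo "--dependency=${dep_type}:$(IFS=:; echo "$*")"
}

# On Sol (not run here): start step2.sh only if step1.sh succeeds
#   jid=$(sbatch --parsable step1.sh)
#   sbatch $(dep_flag afterok "$jid") step2.sh
```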

To run several serial jobs on

- one node: your submit script should run several serial jobs in the background and then use the wait command for all of them to finish

- more than one node: this requires some background in scripting, but the idea is the same as above
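A minimal sketch of the one-node case in bash, assuming four independent serial runs; run_case is a hypothetical placeholder for your own program, and the job size mirrors the lts examples in this section:

```shell
#!/bin/bash
#SBATCH --partition=lts
#SBATCH --time=1:00:00
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=4
#SBATCH --job-name=bundle

# Hypothetical stand-in for one serial run; on Sol replace the body with
# something like: ./myjob < case$1.in > case$1.out
run_case() {
  echo "case $1 done" > "case$1.out"
}

cd "${SLURM_SUBMIT_DIR:-.}" || exit 1

# Start one serial run per requested core, each in the background (&)
ncases=${SLURM_NTASKS_PER_NODE:-4}
i=1
while [ "$i" -le "$ncases" ]; do
  run_case "$i" &
  i=$((i + 1))
done

# Block until every background run has finished before the job exits
wait
```

Without the final wait, the batch script would exit as soon as the loop finished and SLURM would end the job, stopping any runs still in progress.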

Monitoring & Manipulating Jobs

| SLURM Command | Description | PBS Command |
|---|---|---|
| squeue | check job status (all jobs) | qstat |
| squeue -u username | check job status of user username | qstat -u username |
| squeue --start | Show estimated start time of jobs in queue | showstart jobid |
| scontrol show job jobid | Check status of your job identified by jobid | checkjob jobid |
| scancel jobid | Cancel your job identified by jobid | qdel jobid |
| scontrol hold jobid | Put your job identified by jobid on hold | qhold jobid |
| scontrol release jobid | Release the hold that you put on jobid | qrls jobid |

- The following scripts written by RC staff can also be used for monitoring jobs:

- checkq: squeue with additional useful options

- checkload: sinfo with additional options to show load on compute nodes

- Load the soltools module to get access to RC staff created scripts

Modifying Resources for Queued Jobs

- Modify a job after submission but before it starts:

scontrol update SPECIFICATION jobid=<jobid>

- Examples of SPECIFICATION are

- add a dependency after a job has been submitted: dependency=<attributes>

- change job name: jobname=<name>

- change partition: partition=<name>

- modify requested runtime: timelimit=<hh:mm:ss>

- change quality of service (when changing to imlab/engc): qos=nogpu

- request GPUs (when changing to one of the GPU partitions): gres=gpu:<1 or 2>

- SPECIFICATIONs can be combined; for example, the command to move queued job 123456 to the imlab partition and change its timelimit to 48 hours is

scontrol update partition=imlab qos=nogpu timelimit=48:00:00 jobid=123456

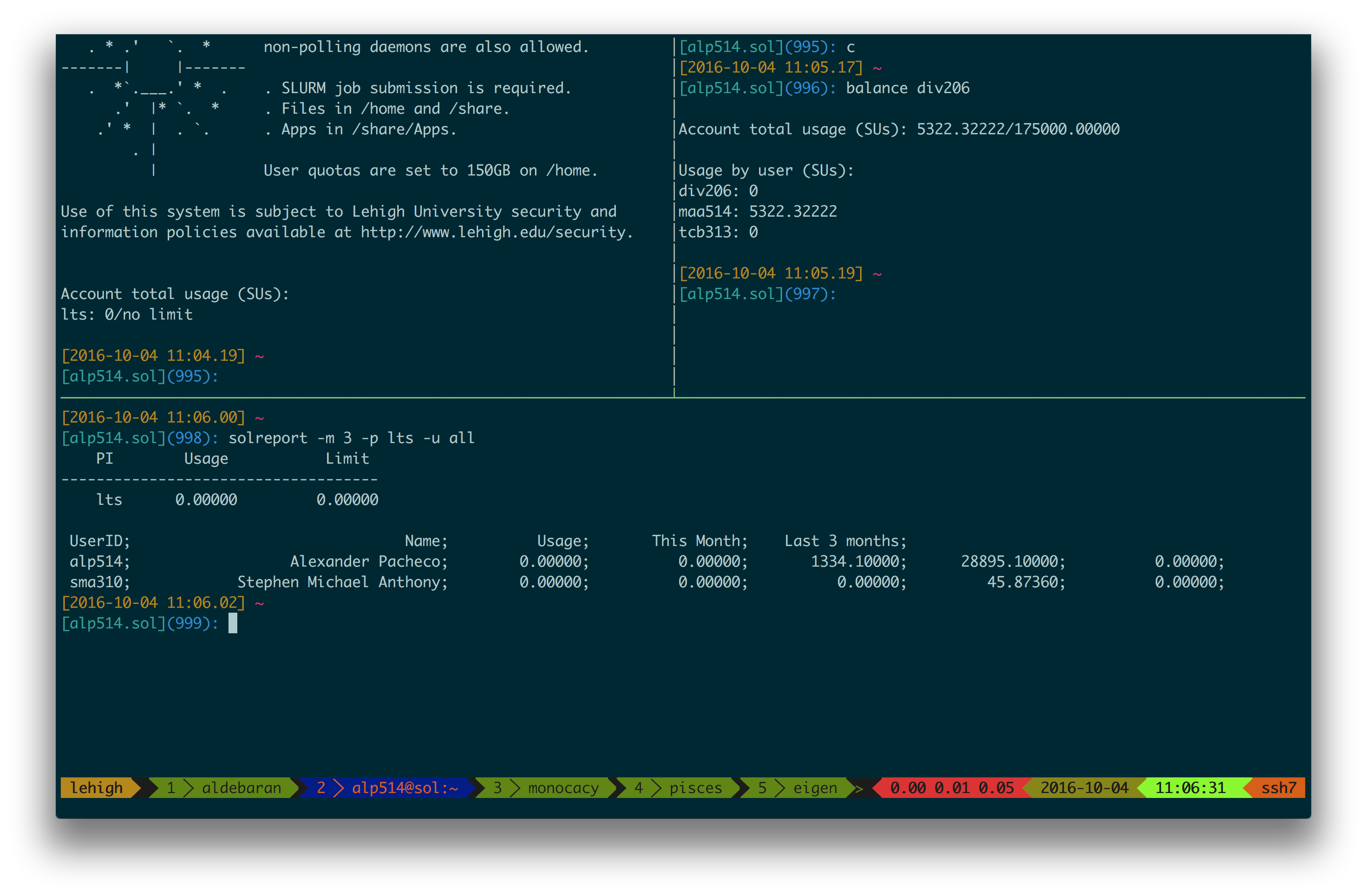

Usage Reporting

- sacct: displays accounting data for all jobs and job steps in the SLURM job accounting log or SLURM database

- sshare: tool for listing the shares of associations to a cluster

We have created scripts based on these to provide usage reporting

- alloc_summary.sh: included in your .bash_profile

- prints allocation usage on your login shell

- balance: prints allocation usage summary

- solreport: obtain your monthly usage report

- PIs can obtain usage reports for all or specific users on their allocation

- use --help for usage information


Online Usage Reporting

- Monthly usage summary (updated daily)

- Scheduler Status (updated every 15 mins)

- Annual Usage Reports:

- Usage reports restricted to Lehigh IPs

Additional Help & Information

- Issue with running jobs or need help to get started:

- Open a help ticket: http://go.lehigh.edu/rchelp

- More Information

- Subscribe

- HPC Training Google Groups: hpctraining-list+subscribe@lehigh.edu

- Research Computing Mailing List: https://lists.lehigh.edu/mailman/listinfo/hpc-l

- My contact info

- eMail: alp514@lehigh.edu

- Tel: (610) 758-6735

- Location: Room 296, EWFM Computing Center

- My Schedule